Database migration using Apache NiFi

Introduction

Apache NiFi is a powerful tool for data migration. It provides an easy to use interface to connect to a database server and perform data ingestion and data extraction. This blog post explains a sample NiFi flow to migrate database tables from one database server (source database) to another database server (destination database) using Apache NiFi.

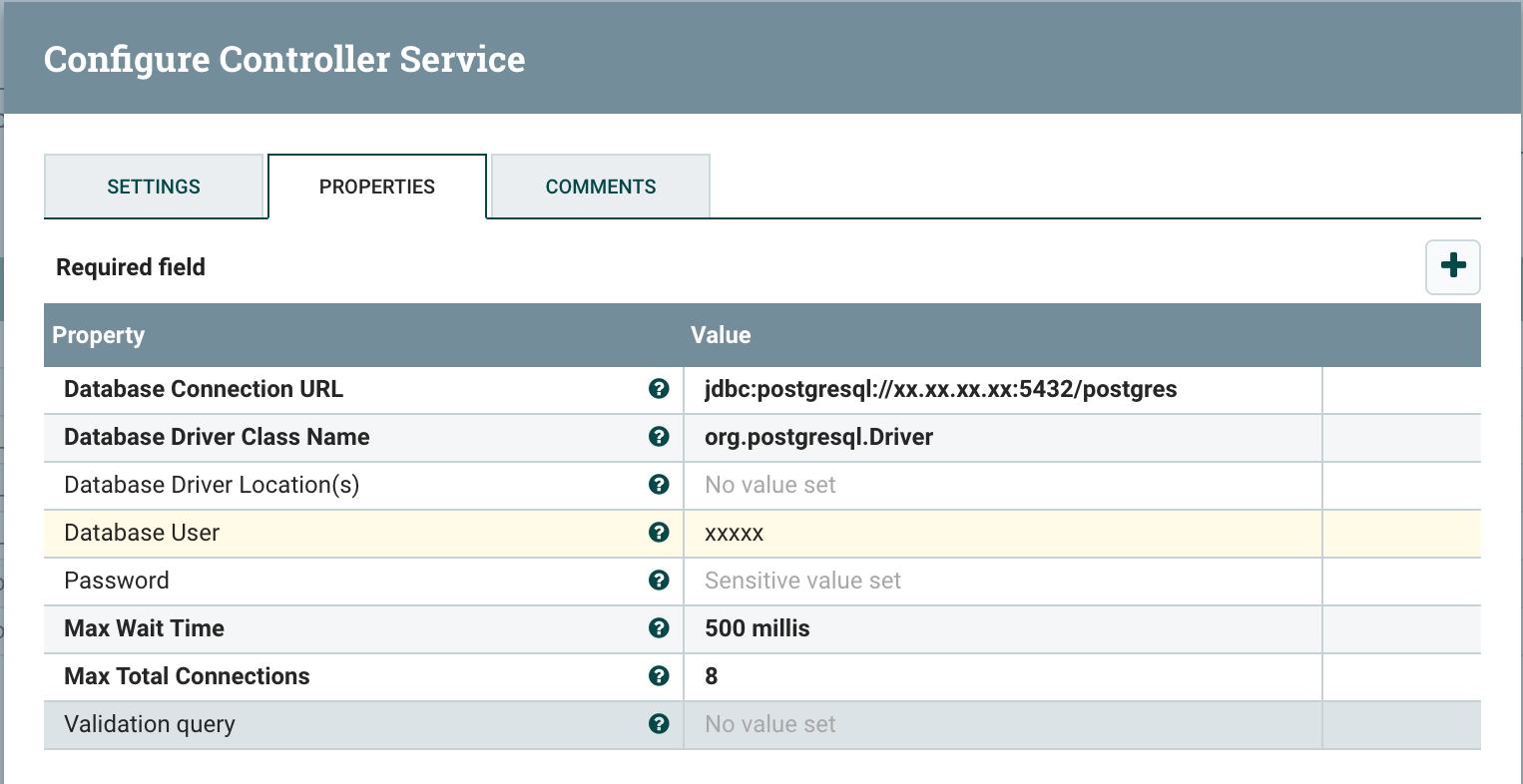

Database Connections

To start with, create two DBCPConnectionPool controller services, one for the source database and one for the destination database. Add database properties: ‘Database Connection URL’, ‘Database Driver Class Name’, ‘Database User’, and ‘Password’. Make sure that database driver jars are included in ‘nifi_home/lib/’. Alternatively, the location of database driver jars can also be added to the ‘Database Driver Location(s)’ properties.

NiFi Flow

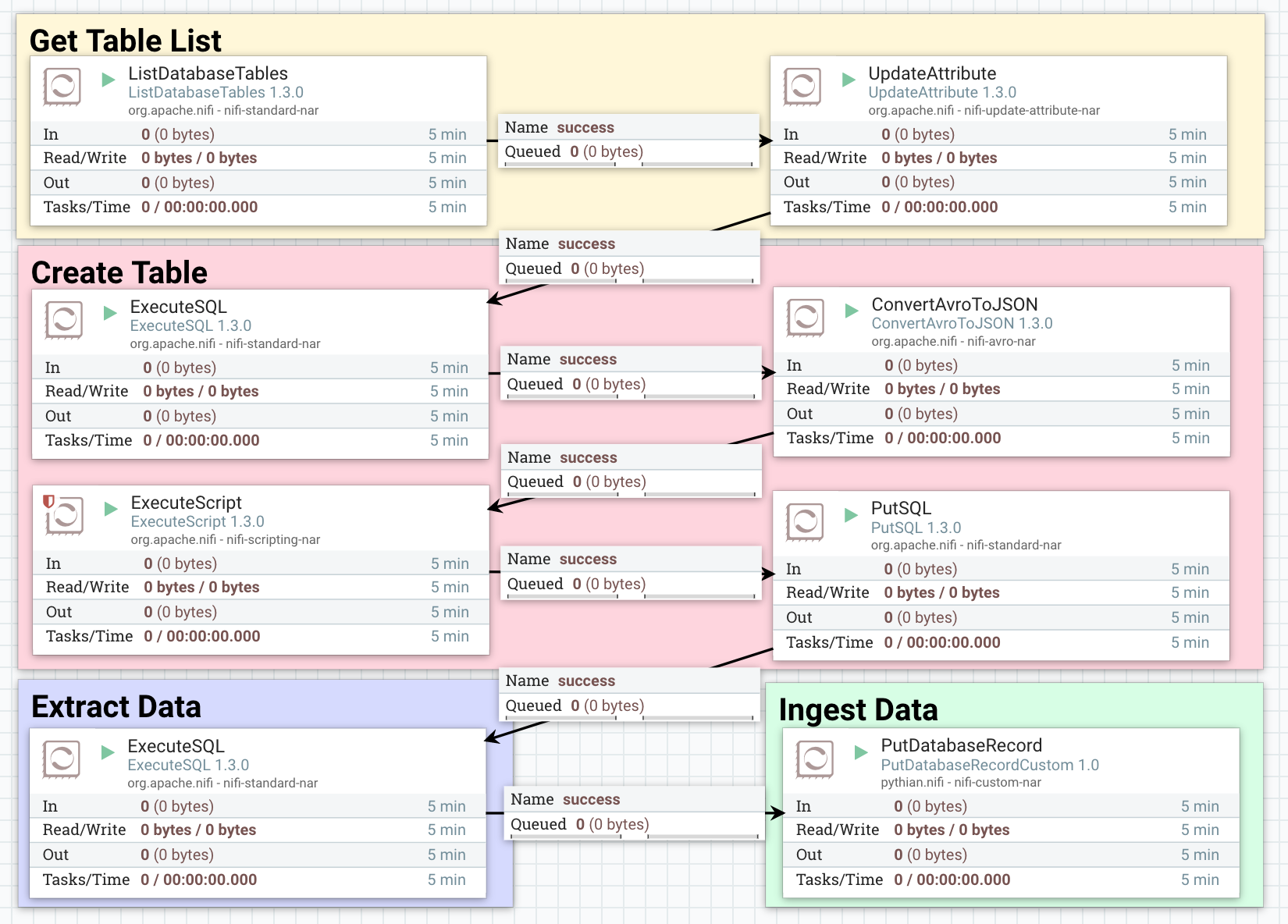

NiFi flow for database migration has four phases:

Get Table List

- ListDatabaseTables: There may be a need to migrate all tables or some of the tables only. So, the initial task is to list the tables to migrate. Use ListDatabaseTables processor to fetch the list of tables to be migrated. Edit the properties of the processor to connect to source database and filter out the list of tables. Properties: Catalog, Schema Pattern, Table Name Pattern and Table Types to filter out the list of tables. The processor outputs a single flowfile for each table in the list, and to each flowfile, adds the following properties: db.table.name, db.table.catalog, db.table.schema, db.table.name. This processor adds the details of source database and table associated with the flowfile.

- UpdateAttribute: The ListDatabaseTables processor adds the table properties for the source database system. To add the destination database properties, use UpdateAttrbiute processor. Add following properties: ‘destination_table_catalog’ and ‘destination_table_schema’. Table name will be same as in the source database (db.table.name).

Create Table

- ExecuteSQL: After each flowfile has the information of the source database, the destination database, and the table to migrate, the next step is to create the table in the destination database. Use the ExecuteSQL processor to fetch the table schema of the table from the source database. The SQL statement to fetch the table schema may vary as per the type of the source database. The ExecuteSQL outputs the Avro flowfile which contains table schema.

- ConvertAvroToJson: The Avro format flowfile is converted to Json format using the ConvertAvroToJson processor.

- ExecuteScript: Use ExecuteScript processor to generate Data Definition Language (DDL) statement to create table for the destination database. Through the ExecuteScript processor, scripting language such as python, groovy, ruby etc. can be used to read the Json format schema of the table and create the DDL statement.

- PutSQL: To execute the DDL statement to create table in the destination database, use PutSQL processor.

Extract Data

- ExecuteSQL: Use ExecuteSQL processor to fetch table data from the source database. Add the SQL select statement ‘select * from ${db.table.catalog}.${db.table.schema}.${db.table.name};’. This processor outputs the Avro format flowfile with all the data of the source table. Alternatively, GenerateTableFetch and QueryDatabase processors can be used to extract data, which will be discussed in the next blog post.

Ingest Data

- PutDatabaseRecord: The PutDatabaseRecord processor reads the Avro data, creates a batch upload and uploads the data to the table created in the destination table. Data ingestion can also be performed using ConvertAvroToJson and PutSQL processor. However, PutDatabaseRecord is better and faster way to upload data. PutSQL processor can possibly be used if the extracted data requires some transformations.

This blog post discussed elementary NiFi flow to migrate tables across the database. The upcoming blog post will cover details on handling large database tables, and other issues such as datatype inconsistencies etc. Hope this post helped you understand the NiFi flow and processors used to migrate database tables. If you have any questions or comments, do post them in the comments section.

Data Migration Services

Ready to migrate your data with confidence?

On this page

Share this

Share this

More resources

Learn more about Pythian by reading the following blogs and articles.

Using Kafka to Replicate Data from Postgres to MS SQL Server: Part 1

Using Kafka to Replicate Data from Postgres to MS SQL Server: Part 1

Apr 14, 2022 12:00:00 AM

9

min read

Database migration: how to handle large tables

![]()

Database migration: how to handle large tables

Jun 26, 2018 12:00:00 AM

3

min read

Your 9-Step Checklist for a Fast, Seamless Snowflake Migration

Your 9-Step Checklist for a Fast, Seamless Snowflake Migration

Apr 11, 2023 12:00:00 AM

6

min read

Ready to unlock value from your data?

With Pythian, you can accomplish your data transformation goals and more.