Understanding Your Data Lifecycle for Better Data Management

Managing data is a lot harder than it sounds. It’s often stored in multiple locations. It can be structured, semi-structured or unstructured. It comes in a variety of formats. And it’s constantly growing.

Data lifecycle management (DLM) is a policy-based approach for managing data at each stage of its lifecycle, from creation to usage to deletion. This approach provides a holistic view of data, helping organizations capture insights and make data-driven decisions. It also makes it easier to detect data breaches and ensure regulatory compliance.

While plenty of DLM products exist on the market, data lifecycle management is not a product. Rather, it’s an approach to managing data through practices and procedures, enabled by tools. Understanding the data lifecycle can help you better manage data—at every stage of its useful life.

Why is DLM so important?

Data is your organization’s most valuable asset, and just like physical assets, data can be stolen, lost or misused. Data lifecycle management can help to mitigate security, privacy and compliance risks. For example, it can help you manage sensitive data by ensuring that access is revoked when an employee leaves the organization. It also helps to ensure that data is handled in compliance with privacy regulations.

DLM improves data quality, providing a single source of truth for consistency and accuracy across the organization. Ultimately, this provides a strong foundation for analytics and machine learning, making clean data quickly accessible for timely decision-making. And by removing data that is no longer needed, you can reduce your computing and storage costs.

Stages of the data lifecycle include:

Data collection: Initially, data is created and/or collected from SaaS tools, IoT devices, web events and other data sources, including spreadsheets and legacy systems.

Data ingestion: Data is moved from the source system to a central repository, such as a data warehouse or lake, where it’s stored and consolidated to establish a single source of truth.

Data transformation: Next, raw data is formatted, cleansed and restructured—turning it into a consistent, usable format.

Data activation: Once data has been transformed, it can be merged with other data sets, so users can start to query data and build models.

Data analytics: From here, data can be used in analytics, business intelligence and machine learning applications.

Data monitoring and auditing: Data is monitored to identify and resolve any issues, avoid downtime, ensure compliance, and control costs.

Archival and disposal: Once data is no longer needed for daily operations but still needs to be accessible for compliance purposes, it can be archived in a long-term storage solution. When it reaches the end of its life, it should be securely disposed of in line with data protection regulations.
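Because DLM is policy-based, the archival and disposal stage usually comes down to rules about how old data is. The Python below is a minimal sketch of an age-based policy, not a product feature: the `Record` type and the `ACTIVE_DAYS` and `RETENTION_DAYS` thresholds are hypothetical, and real values should come from your own DLM policies and the regulations that apply to your data.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum


class Stage(Enum):
    ACTIVE = "active"      # still used in daily operations
    ARCHIVED = "archived"  # kept in long-term storage for compliance
    DISPOSE = "dispose"    # past retention: schedule secure disposal


# Hypothetical policy thresholds; real values come from your own DLM policy
# and the regulations that apply to your data.
ACTIVE_DAYS = 90
RETENTION_DAYS = 365 * 7


@dataclass
class Record:
    """Hypothetical record type: just an ID and a creation date."""
    record_id: str
    created: date


def classify(record: Record, today: date) -> Stage:
    """Return the lifecycle stage a record belongs in on `today`, based on its age."""
    age_days = (today - record.created).days
    if age_days < ACTIVE_DAYS:
        return Stage.ACTIVE
    if age_days < RETENTION_DAYS:
        return Stage.ARCHIVED
    return Stage.DISPOSE


if __name__ == "__main__":
    today = date(2024, 1, 1)
    for days_old in (10, 400, 3000):
        rec = Record(f"rec-{days_old}", today - timedelta(days=days_old))
        print(rec.record_id, classify(rec, today).value)
```

Run as a script, it prints `rec-10 active`, `rec-400 archived` and `rec-3000 dispose`. Keeping the thresholds in one place makes them easy to review as regulations and business needs change.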

Managing your data in Google Cloud

A range of Google Cloud products and services can help manage data throughout its lifecycle—based on your DLM policies and practices—while providing a foundation for machine learning and analytics. These include:

- Data ingestion: Pub/Sub can be used for ingesting and distributing data, while BigQuery Data Transfer Service automates data movement. Cloud Storage buckets support retention policies, and in BigQuery you can set a default expiration time for the tables in a dataset.

- Data collection and transformation: Services such as Cloud Storage and BigQuery help to collect, transform, and manage both structured and unstructured data. In Cloud Storage, lifecycle rules based on your DLM policies can be triggered by conditions such as object age, creation date, storage class, live state and number of newer versions. Database options include:

  - Cloud SQL is a fully managed relational database management system (RDBMS) with MySQL, PostgreSQL and SQL Server engines.

  - Cloud Spanner is a horizontally scalable RDBMS option.

  - Datastore and Cloud Bigtable are NoSQL database options.

- Data activation: Data can be processed and activated with tools like Dataflow, which unifies stream and batch data processing, and Dataprep, which prepares data for machine learning and analytics.

- Data analytics: During this stage of the data lifecycle, you can explore and visualize data to extract insights for informed decision-making. Options include:

  - BigQuery BI Engine is an in-memory analysis service that delivers sub-second query response times.

  - Looker is a business intelligence platform for governed, embedded and self-service BI.

  - Dataproc runs open-source analytics frameworks, such as Apache Spark and Hadoop, at scale.

  - Vertex AI lets you build and deploy machine learning models, including generative AI models.

- Data monitoring and auditing: Cloud Composer, a managed Apache Airflow service, can orchestrate and monitor pipelines across clouds, with built-in integration for Google Cloud services including Cloud Storage, Datastore, Dataflow, Dataproc and Pub/Sub. This helps to ensure compliance with data management policies.

- Archival: Data can be archived for compliance or disaster recovery purposes in Cloud Storage, using the Nearline, Coldline and Archive storage classes.

- Disposal: Once a deletion request is made (and confirmed), Google eliminates data iteratively from application and storage layers, from both active and backup storage systems.
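Cloud Storage lifecycle rules are one way to encode archival and disposal policies directly on a bucket. The Python below is a sketch, under stated assumptions, that builds a lifecycle configuration in the shape of the `lifecycle` field of a bucket in the Cloud Storage JSON API, using the documented `SetStorageClass` and `Delete` actions and the `age`, `matchesStorageClass` and `isLive` conditions. The day thresholds are hypothetical placeholders for your own DLM policy values; check the current Cloud Storage documentation before applying any configuration, because `Delete` rules remove data permanently.

```python
import json

# Hypothetical DLM policy thresholds, in days since object creation.
NEARLINE_AFTER_DAYS = 30
COLDLINE_AFTER_DAYS = 90
ARCHIVE_AFTER_DAYS = 365
DELETE_AFTER_DAYS = 365 * 7


def storage_class_rule(age_days: int, from_classes: list, to_class: str) -> dict:
    """Move objects in `from_classes` to `to_class` once they are `age_days` old."""
    return {
        "action": {"type": "SetStorageClass", "storageClass": to_class},
        "condition": {"age": age_days, "matchesStorageClass": from_classes},
    }


def build_lifecycle_config() -> dict:
    """Assemble the rules into a bucket-level lifecycle configuration."""
    rules = [
        # Progressively colder (cheaper) storage as data ages.
        storage_class_rule(NEARLINE_AFTER_DAYS, ["STANDARD"], "NEARLINE"),
        storage_class_rule(COLDLINE_AFTER_DAYS, ["NEARLINE"], "COLDLINE"),
        storage_class_rule(ARCHIVE_AFTER_DAYS, ["COLDLINE"], "ARCHIVE"),
        # End of life: delete live objects once the retention period has passed.
        {
            "action": {"type": "Delete"},
            "condition": {"age": DELETE_AFTER_DAYS, "isLive": True},
        },
    ]
    return {"lifecycle": {"rule": rules}}


if __name__ == "__main__":
    print(json.dumps(build_lifecycle_config(), indent=2))
```

The `age` condition counts days since an object was created, not days spent in its current storage class, so each threshold is measured from the same starting point. As we understand the current tooling, the printed JSON can be saved to a file and applied with `gcloud storage buckets update gs://BUCKET_NAME --lifecycle-file=FILE`, where `BUCKET_NAME` and `FILE` are placeholders.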

Taking the next step

Regardless of your maturity level with data management, it’s essential to review your policies and practices regularly. Update DLM practices as technologies and regulatory requirements change—and as your business evolves.

Need help? Pythian’s data management services for Google Cloud can help you find the right option—and migrate, manage and modernize your data—for the fastest time to value. Get in touch with a Pythian Google Cloud expert to see how our team can help.


More resources

Learn more about Pythian by reading the following blogs and articles.

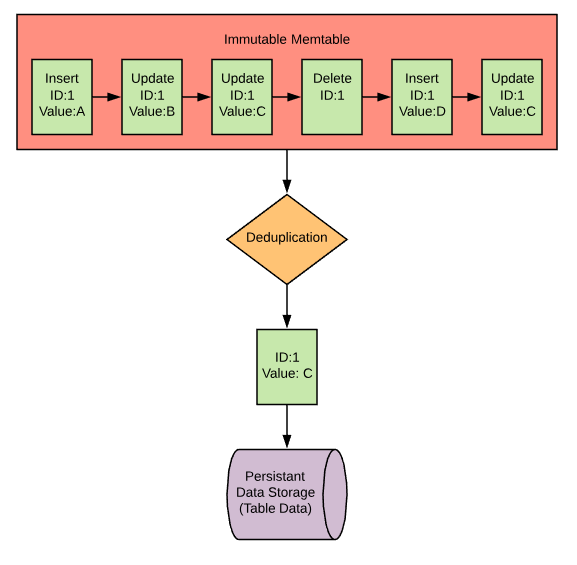

Exposing MyRocks Internals via system variables: Part 2, Initial Data Flushing

Exposing MyRocks internals via system variables: Part 1, Data Writing

Exposing MyRocks Internals via system variables: Part 4, Compression and Bloom Filters

Ready to unlock value from your data?

With Pythian, you can accomplish your data transformation goals and more.