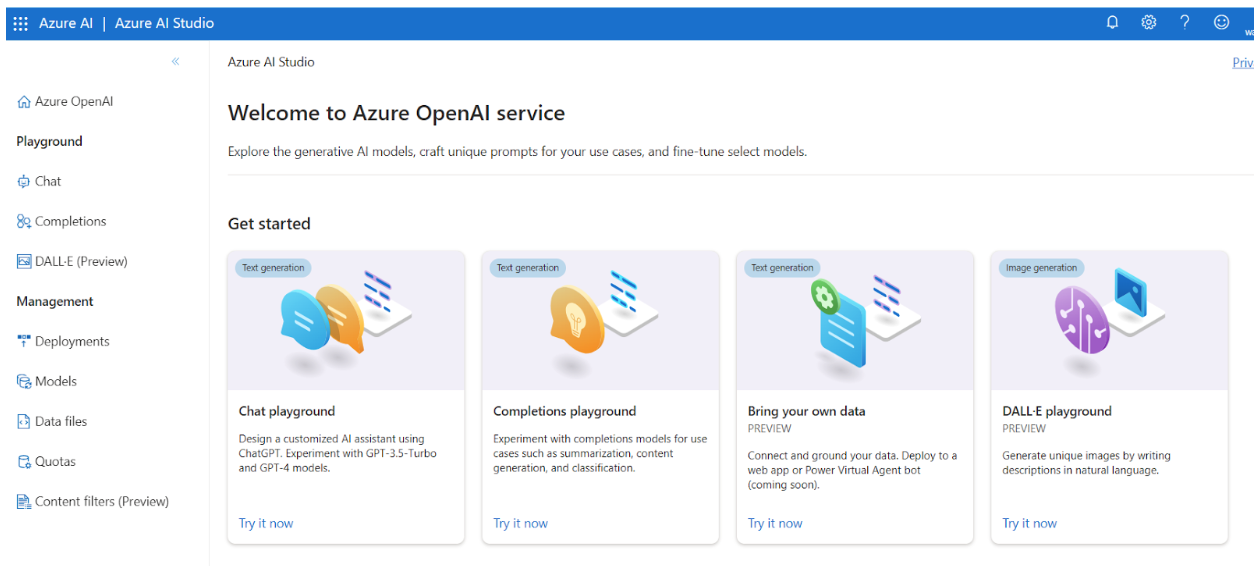

Trying out Large Language Models with Azure OpenAI Service

What is a Language Model?

A language model is a machine learning model designed to understand and generate human language. It learns the patterns, syntax, semantics, and context of language from vast amounts of text data. This allows language models to perform a wide range of tasks, from answering questions and translating languages to generating creative writing and even code.

A Large Language Model (LLM) takes this concept to a new level. It is trained on colossal datasets, making it capable of capturing context, nuances, and subtleties in text. The models behind ChatGPT are prime examples of LLMs that have been fine-tuned to offer practical and innovative applications. The industry is innovating incredibly fast, with OpenAI already offering GPT-4 as its latest, more advanced model with stronger reasoning capabilities. Now let’s look at how we can try these models out with Azure OpenAI Service and just a few lines of Python.

Interacting with the Azure OpenAI API

The Azure OpenAI API provides a gateway to harness the capabilities of these language models programmatically. Developers can integrate an LLM into their applications to perform various language-related tasks without extensive linguistic expertise, and without having to run their own hardware to host the model and supply the computing power needed to generate responses.

Initial Configuration

Configuration is extremely simple. We need to set two environment variables:

- AZURE_OPENAI_KEY: this is the API key generated by the service to authorize access.

- AZURE_OPENAI_ENDPOINT: this is the URL endpoint that is created when you deploy the service.
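If either variable is unset, the problem tends to show up later as a less obvious error from the API call, so it can help to check both values up front. The following is a minimal sketch of such a check. The helper name `load_azure_openai_config` is made up for illustration and is not part of the `openai` package.

```python
import os

REQUIRED_VARS = ("AZURE_OPENAI_KEY", "AZURE_OPENAI_ENDPOINT")


def load_azure_openai_config(env=None):
    """Return both settings as a dict, or raise if one is missing or empty.

    `env` defaults to os.environ; passing a plain dict makes the check easy
    to test. This helper is hypothetical and only illustrates the idea.
    """
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {
        "api_key": env["AZURE_OPENAI_KEY"],
        "api_base": env["AZURE_OPENAI_ENDPOINT"],
    }
```

The returned values could then be assigned to `openai.api_key` and `openai.api_base` instead of calling `os.getenv` directly.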

import os

import openai

# Note: this uses the module-level interface of the openai Python package
# from before version 1.0.
openai.api_key = os.getenv("AZURE_OPENAI_KEY")
openai.api_base = os.getenv("AZURE_OPENAI_ENDPOINT")
openai.api_type = 'azure'
openai.api_version = '2023-05-15'

# Deployments are like instances of the model that you can refer to by name
deployment_name = 'gpt35turbo'

# Send a chat completion call to generate an answer
print('Sending a chat completion request')
response = openai.ChatCompletion.create(
    engine=deployment_name,
    messages=[
        {"role": "system", "content": "Assistant is a large language model trained by OpenAI."},
        {"role": "user", "content": "Write a haiku about winter in Canada"}
    ]
)

# Print the full response, then only the generated text
print(response)
print(response['choices'][0]['message']['content'])

Prompt engineering is the skill of communicating with the LLM to produce a desired output. In this example, we start the interaction by sending an array of messages, each with a “role” and a “content”. The first message has the “system” role: it is addressed to the model itself and sets how the assistant should behave, here simply as a general-purpose assistant with a general description. The next message has the “user” role, so it comes directly from the end user, and its “content” contains the request to write us a haiku. If you have used the free ChatGPT website, this is the basic code version of the back and forth you have on OpenAI’s chat site.
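To make the back and forth concrete, here is a small sketch of how the message list could grow over a conversation. The helpers `start_conversation` and `add_turn` are hypothetical names used only for illustration, and the role check is limited to the three roles that appear in this post.

```python
def start_conversation(system_prompt):
    """Create a new message list that begins with the system message."""
    return [{"role": "system", "content": system_prompt}]


def add_turn(messages, role, content):
    """Return a new list with one more message; the input list is not modified."""
    if role not in ("system", "user", "assistant"):
        raise ValueError(f"unexpected role: {role!r}")
    return messages + [{"role": role, "content": content}]


messages = start_conversation("Assistant is a large language model trained by OpenAI.")
messages = add_turn(messages, "user", "Write a haiku about winter in Canada")
# Once a reply arrives, it is appended as an "assistant" message so that the
# next request carries the whole exchange.
messages = add_turn(
    messages,
    "assistant",
    "Snowflakes softly fall \nA blanket of white covers all \nWinter in Canada",
)
messages = add_turn(messages, "user", "Now write one about summer")
```

Because the model only sees what is in the list, sending the earlier turns along with each request is what lets a follow-up like the last message refer back to the first haiku.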

The actual text response is in the choices[0].message.content key. Here is the whole JSON response:

{
  "id": "chatcmpl-7mOPANqMb3T1MVISntytoCiJePoQu",
  "object": "chat.completion",
  "created": 1691767912,
  "model": "gpt-35-turbo",
  "choices": [
    {
      "index": 0,
      "finish_reason": "stop",
      "message": {
        "role": "assistant",
        "content": "Snowflakes softly fall \nA blanket of white covers all \nWinter in Canada"
      }
    }
  ],
  "usage": {
    "completion_tokens": 16,
    "prompt_tokens": 32,
    "total_tokens": 48
  }
}

As you can see, the full JSON response gives you some feedback on the LLM’s process, such as the reason for finishing, the underlying model, and the token counts involved. Tokens in particular are worth getting a handle on, as all the models have limits and costs tied to token counts. Once you have this simple scaffolding going, the sky's the limit. Let's look at some common actions that the LLM can facilitate:
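First, though, a quick look at reading those fields in code. The sketch below parses a trimmed copy of the response shown above and pulls out the text, the finish reason, and the token counts. The helper names are hypothetical, and the price passed to `estimate_cost` is a placeholder rather than a real rate, so check the pricing for your own deployment.

```python
import json

# Trimmed copy of the response shown above (raw string keeps the \n escapes
# intact for the JSON parser).
raw = r"""
{
  "model": "gpt-35-turbo",
  "choices": [
    {
      "index": 0,
      "finish_reason": "stop",
      "message": {
        "role": "assistant",
        "content": "Snowflakes softly fall \nA blanket of white covers all \nWinter in Canada"
      }
    }
  ],
  "usage": {"completion_tokens": 16, "prompt_tokens": 32, "total_tokens": 48}
}
"""


def summarize_response(response):
    """Collect the fields discussed above into one flat dict."""
    choice = response["choices"][0]
    usage = response["usage"]
    return {
        "text": choice["message"]["content"],
        # "length" means the answer was cut off by the token limit
        "truncated": choice["finish_reason"] == "length",
        "prompt_tokens": usage["prompt_tokens"],
        "completion_tokens": usage["completion_tokens"],
        "total_tokens": usage["total_tokens"],
    }


def estimate_cost(total_tokens, price_per_1k_tokens):
    """Rough cost estimate; the price is supplied by the caller (assumption)."""
    return total_tokens / 1000 * price_per_1k_tokens


summary = summarize_response(json.loads(raw))
print(summary["text"])
print("tokens used:", summary["total_tokens"])
```

Checking the finish reason is a cheap way to notice answers that stopped because they ran out of tokens rather than because the model was done.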

General Purpose Text Generation

LLMs can help create new text content from scratch with surprisingly little input. In the example below, just a short opening sentence is enough to get it going in the right direction.

response = openai.ChatCompletion.create(
    engine=deployment_name,
    messages=[
        {"role": "system", "content": "Assistant is a creative, award winning, science fiction writer"},
        {"role": "user", "content": "Complete this paragraph to start a short story: It was the year 2400, great turbulence in the air."}
    ]
)

Response:

The world as we knew it had drastically changed after The Cataclysm that occurred in the year 2250. Cities were rebuilt, new technologies were created, and a totalitarian government had risen to power. I, Juniper Martel, was born into this new world and had never known anything different. That was until I stumbled upon an ancient book that had been hidden for centuries. It contained secrets of the past, including a map to a forbidden land. I knew then that I had to find it, no matter the cost.

Some other tasks that can be done include language translation, text summarization, code generation, answering questions, and carrying on a conversation in general.

In this blog post we’re only showing how to get the ball rolling using the general models trained by OpenAI, but the most powerful use case is to use your own datasets to train the models. This is another area where Azure governance is extremely important, since you can make sure that your training data and the resulting model never leave the boundaries of your Azure cloud environment and abide by any geography or privacy regulations in scope. How to train with your own data will be a topic for a future post.
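Going back to the other tasks listed above: in most cases the only things that change are the system and user messages. Here is a minimal sketch, assuming one system prompt per task. The prompt wording and the names `TASK_PROMPTS` and `build_task_messages` are illustrative, not something prescribed by the service.

```python
# Hypothetical system prompts, one per task; the wording is only an example.
TASK_PROMPTS = {
    "translate": "Assistant translates the user's text into French.",
    "summarize": "Assistant summarizes the user's text in one sentence.",
    "code": "Assistant writes Python code that solves the user's request.",
}


def build_task_messages(task, text):
    """Build the message list for one of the example tasks."""
    if task not in TASK_PROMPTS:
        raise ValueError(f"unknown task: {task!r}")
    return [
        {"role": "system", "content": TASK_PROMPTS[task]},
        {"role": "user", "content": text},
    ]


messages = build_task_messages("summarize", "LLMs are trained on colossal datasets.")
```

The resulting list would be passed as the `messages` argument of the same `openai.ChatCompletion.create` call used earlier in the post.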

Unleashing the Potential

LLMs open the door to a realm of possibilities in NLP. From generating creative content to automating complex language tasks, LLMs showcase the progress AI has made in understanding and emulating human language. As developers explore and integrate LLMs into their applications, the future of human-machine interaction becomes more exciting and dynamic than ever before. However, a real-world application needs enterprise-level security, governance, and scale, and that is where Azure OpenAI Service truly shines.
