Oracle DBMS_CLOUD package with AWS S3

The package DBMS_CLOUD was first introduced in the Autonomous Database to provide a simple way to interact with an object store from the database. It is now available for on-premise databases starting with 19.9c and 21c. It works with OCI, Azure, and AWS and soon should also provide the same functionality for GCP, or at least that is what I’m expecting.

In this blog, we will review how to install the package in the Database and some of the more useful procedures in my opinion. I have chosen to use an AWS S3 bucket to test the package, but the same functionality should work with OCI and Azure.

To begin, we need to install the package. The procedure is documented under note: How To Setup And Use DBMS_CLOUD Package (Doc ID 2748362.1). My environment is a multitenant 19c Database which PSU is 19.15.1 (July 2022).

Setup DBMS_CLOUD

Following the note installation steps, execute the steps.

Execute dbms_cloud_install.sql script using catcon.pl:

oracle@oracle19c dbc]$ $ORACLE_HOME/perl/bin/perl $ORACLE_HOME/rdbms/admin/catcon.pl -u sys/****** --force_pdb_mode 'READ WRITE' -b dbms_cloud_install -d /home/oracle/dbc -l /home/oracle/dbc dbms_cloud_install.sql catcon::set_log_file_base_path: ALL catcon-related output will be written to [/home/oracle/dbc/dbms_cloud_install_catcon_4939.lst] catcon::set_log_file_base_path: catcon: See [/home/oracle/dbc/dbms_cloud_install*.log] files for output generated by scripts catcon::set_log_file_base_path: catcon: See [/home/oracle/dbc/dbms_cloud_install_*.lst] files for spool files, if any catcon.pl: completed successfully

Check if the required objects have been created:

SQL> select con_id, owner, object_name, status, sharing, oracle_maintained from cdb_objects where object_name = 'DBMS_CLOUD' order by con_id;

CON_ID OWNER OBJECT_NAME STATUS SHARING O

---------- -------------------- ------------------------------ ------- ------------------ -

1 PUBLIC DBMS_CLOUD VALID METADATA LINK Y

1 C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

1 C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

3 PUBLIC DBMS_CLOUD VALID METADATA LINK Y

3 C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

3 C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

SQL> select owner, object_name, status, sharing, oracle_maintained from dba_objects where object_name = 'DBMS_CLOUD';

OWNER OBJECT_NAME STATUS SHARING O

-------------------- ------------------------------ ------- ------------------ -

PUBLIC DBMS_CLOUD VALID METADATA LINK Y

C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

Now we need to prepare the wallet and certificates to connect to the different public clouds. If your database already has a wallet, you can use it or just create a new one. In the note (Doc ID: 2748362.1) there is a small bash script to import all the certificates from the major Certificate Authorities. You can install all or just the ones you are going to use.

In this case, we are creating a new wallet and we installed all the certificates:

[oracle@oracle19c wallet]$ orapki wallet create -wallet . -pwd **** -auto_login Oracle PKI Tool Release 19.0.0.0.0 - Production Version 19.4.0.0.0 Copyright (c) 2004, 2021, Oracle and/or its affiliates. All rights reserved. Operation is successfully completed. [oracle@oracle19c wallet]$ orapki wallet display -wallet . Oracle PKI Tool Release 19.0.0.0.0 - Production Version 19.4.0.0.0 Copyright (c) 2004, 2021, Oracle and/or its affiliates. All rights reserved. Requested Certificates: User Certificates: Trusted Certificates: Subject: CN=Thawte Timestamping CA,OU=Thawte Certification,O=Thawte,L=Durbanville,ST=Western Cape,C=ZA Subject: CN=Go Daddy Root Certificate Authority - G2,O=GoDaddy.com\, Inc.,L=Scottsdale,ST=Arizona,C=US Subject: CN=Certum CA,O=Unizeto Sp. z o.o.,C=PL Subject: CN=DigiCert Trusted Root G4,OU=www.digicert.com,O=DigiCert Inc,C=US Subject: CN=Entrust Root Certification Authority,OU=(c) 2006 Entrust\, Inc.,OU=www.entrust.net/CPS is incorporated by reference,O=Entrust\, Inc.,C=US Subject: OU=Security Communication RootCA2,O=SECOM Trust Systems CO.\,LTD.,C=JP Subject: OU=Security Communication RootCA1,O=SECOM Trust.net,C=JP Subject: CN=ISRG Root X1,O=Internet Security Research Group,C=US Subject: CN=AddTrust External CA Root,OU=AddTrust External TTP Network,O=AddTrust AB,C=SE Subject: CN=AffirmTrust Premium,O=AffirmTrust,C=US Subject: CN=LuxTrust Global Root,O=LuxTrust s.a.,C=LU Subject: CN=IdenTrust Public Sector Root CA 1,O=IdenTrust,C=US Subject: CN=DigiCert Global Root CA,OU=www.digicert.com,O=DigiCert Inc,C=US Subject: CN=QuoVadis Root CA 1 G3,O=QuoVadis Limited,C=BM Subject: OU=Class 3 Public Primary Certification Authority,O=VeriSign\, Inc.,C=US Subject: CN=Global Chambersign Root - 2008,O=AC Camerfirma S.A.,SERIALNUMBER=A82743287,L=Madrid (see current address at www.camerfirma.com/address),C=EU Subject: CN=DigiCert Assured ID Root CA,OU=www.digicert.com,O=DigiCert Inc,C=US Subject: CN=NetLock Expressz (Class C) Tanúsítványkiadó,OU=Tanúsítványkiadók (Certification Services),O=NetLock Kft.,L=Budapest,C=HU Subject: CN=Class 3P Primary CA,O=Certplus,C=FR Subject: EMAIL=premium-server@thawte.com,CN=Thawte Premium Server CA,OU=Certification Services Division,O=Thawte Consulting cc,L=Cape Town,ST=Western Cape,C=ZA Subject: CN=QuoVadis Root CA 3,O=QuoVadis Limited,C=BM Subject: OU=ePKI Root Certification Authority,O=Chunghwa Telecom Co.\, Ltd.,C=TW Subject: CN=QuoVadis Root CA 2,O=QuoVadis Limited,C=BM Subject: CN=SwissSign Gold CA - G2,O=SwissSign AG,C=CH Subject: CN=Chambers of Commerce Root - 2008,O=AC Camerfirma S.A.,SERIALNUMBER=A82743287,L=Madrid (see current address at www.camerfirma.com/address),C=EU Subject: CN=Certum Trusted Network CA,OU=Certum Certification Authority,O=Unizeto Technologies S.A.,C=PL Subject: CN=AffirmTrust Premium ECC,O=AffirmTrust,C=US Subject: CN=SSL.com RSA SSL subCA,O=SSL Corporation,L=Houston,ST=Texas,C=US Subject: CN=GlobalSign,O=GlobalSign,OU=GlobalSign Root CA - R2 Subject: CN=GlobalSign,O=GlobalSign,OU=GlobalSign ECC Root CA - R4 Subject: CN=GlobalSign,O=GlobalSign,OU=GlobalSign Root CA - R3 Subject: CN=SecureTrust CA,O=SecureTrust Corporation,C=US Subject: CN=Sonera Class2 CA,O=Sonera,C=FI Subject: CN=Starfield Root Certificate Authority - G2,O=Starfield Technologies\, Inc.,L=Scottsdale,ST=Arizona,C=US Subject: CN=UTN-USERFirst-Hardware,OU=http://www.usertrust.com,O=The USERTRUST Network,L=Salt Lake City,ST=UT,C=US Subject: CN=VeriSign Class 3 Public Primary Certification Authority - G5,OU=(c) 2006 VeriSign\, Inc. - For authorized use only,OU=VeriSign Trust Network,O=VeriSign\, Inc.,C=US Subject: CN=VeriSign Class 3 Public Primary Certification Authority - G4,OU=(c) 2007 VeriSign\, Inc. - For authorized use only,OU=VeriSign Trust Network,O=VeriSign\, Inc.,C=US Subject: CN=VeriSign Class 3 Public Primary Certification Authority - G3,OU=(c) 1999 VeriSign\, Inc. - For authorized use only,OU=VeriSign Trust Network,O=VeriSign\, Inc.,C=US Subject: CN=Deutsche Telekom Root CA 2,OU=T-TeleSec Trust Center,O=Deutsche Telekom AG,C=DE Subject: CN=Entrust.net Certification Authority (2048),OU=(c) 1999 Entrust.net Limited,OU=www.entrust.net/CPS_2048 incorp. by ref. (limits liab.),O=Entrust.net Subject: OU=Go Daddy Class 2 Certification Authority,O=The Go Daddy Group\, Inc.,C=US Subject: CN=AAA Certificate Services,O=Comodo CA Limited,L=Salford,ST=Greater Manchester,C=GB Subject: CN=GeoTrust Global CA,O=GeoTrust Inc.,C=US Subject: CN=Entrust Root Certification Authority - EC1,OU=(c) 2012 Entrust\, Inc. - for authorized use only,OU=See www.entrust.net/legal-terms,O=Entrust\, Inc.,C=US Subject: CN=thawte Primary Root CA - G3,OU=(c) 2008 thawte\, Inc. - For authorized use only,OU=Certification Services Division,O=thawte\, Inc.,C=US Subject: CN=thawte Primary Root CA - G2,OU=(c) 2007 thawte\, Inc. - For authorized use only,O=thawte\, Inc.,C=US Subject: CN=D-TRUST Root Class 3 CA 2 2009,O=D-Trust GmbH,C=DE Subject: CN=USERTrust RSA Certification Authority,O=The USERTRUST Network,L=Jersey City,ST=New Jersey,C=US Subject: CN=Baltimore CyberTrust Root,OU=CyberTrust,O=Baltimore,C=IE Subject: CN=thawte Primary Root CA,OU=(c) 2006 thawte\, Inc. - For authorized use only,OU=Certification Services Division,O=thawte\, Inc.,C=US Subject: CN=Swisscom Root CA 2,OU=Digital Certificate Services,O=Swisscom,C=ch Subject: CN=DST Root CA X3,O=Digital Signature Trust Co. Subject: CN=QuoVadis Root CA 3 G3,O=QuoVadis Limited,C=BM Subject: CN=Amazon Root CA 4,O=Amazon,C=US Subject: CN=Amazon Root CA 3,O=Amazon,C=US Subject: CN=Amazon Root CA 2,O=Amazon,C=US Subject: CN=GeoTrust Universal CA,O=GeoTrust Inc.,C=US Subject: CN=Amazon Root CA 1,O=Amazon,C=US Subject: CN=Entrust Root Certification Authority - G2,OU=(c) 2009 Entrust\, Inc. - for authorized use only,OU=See www.entrust.net/legal-terms,O=Entrust\, Inc.,C=US Subject: CN=SwissSign Platinum CA - G2,O=SwissSign AG,C=CH Subject: CN=UTN-USERFirst-Object,OU=http://www.usertrust.com,O=The USERTRUST Network,L=Salt Lake City,ST=UT,C=US Subject: CN=DigiCert High Assurance EV Root CA,OU=www.digicert.com,O=DigiCert Inc,C=US Subject: CN=Actalis Authentication Root CA,O=Actalis S.p.A./03358520967,L=Milan,C=IT Subject: CN=AddTrust Class 1 CA Root,OU=AddTrust TTP Network,O=AddTrust AB,C=SE Subject: CN=Chambers of Commerce Root,OU=http://www.chambersign.org,O=AC Camerfirma SA CIF A82743287,C=EU Subject: CN=GTE CyberTrust Global Root,OU=GTE CyberTrust Solutions\, Inc.,O=GTE Corporation,C=US Subject: CN=DigiCert Global Root G3,OU=www.digicert.com,O=DigiCert Inc,C=US Subject: CN=AffirmTrust Networking,O=AffirmTrust,C=US Subject: CN=SwissSign Silver CA - G2,O=SwissSign AG,C=CH Subject: CN=DigiCert Global Root G2,OU=www.digicert.com,O=DigiCert Inc,C=US Subject: OU=VeriSign Trust Network,OU=(c) 1998 VeriSign\, Inc. - For authorized use only,OU=Class 3 Public Primary Certification Authority - G2,O=VeriSign\, Inc.,C=US Subject: CN=COMODO RSA Certification Authority,O=COMODO CA Limited,L=Salford,ST=Greater Manchester,C=GB Subject: CN=DigiCert Assured ID Root G3,OU=www.digicert.com,O=DigiCert Inc,C=US Subject: CN=DigiCert Assured ID Root G2,OU=www.digicert.com,O=DigiCert Inc,C=US Subject: CN=GlobalSign Root CA,OU=Root CA,O=GlobalSign nv-sa,C=BE Subject: CN=USERTrust ECC Certification Authority,O=The USERTRUST Network,L=Jersey City,ST=New Jersey,C=US Subject: CN=Buypass Class 2 Root CA,O=Buypass AS-983163327,C=NO Subject: CN=XRamp Global Certification Authority,O=XRamp Security Services Inc,OU=www.xrampsecurity.com,C=US Subject: CN=GeoTrust Primary Certification Authority - G3,OU=(c) 2008 GeoTrust Inc. - For authorized use only,O=GeoTrust Inc.,C=US Subject: CN=GeoTrust Primary Certification Authority - G2,OU=(c) 2007 GeoTrust Inc. - For authorized use only,O=GeoTrust Inc.,C=US Subject: CN=SSL.com Root Certification Authority RSA,O=SSL Corporation,L=Houston,ST=Texas,C=US Subject: CN=Buypass Class 3 Root CA,O=Buypass AS-983163327,C=NO Subject: CN=UTN-USERFirst-Client Authentication and Email,OU=http://www.usertrust.com,O=The USERTRUST Network,L=Salt Lake City,ST=UT,C=US Subject: CN=D-TRUST Root Class 3 CA 2 EV 2009,O=D-Trust GmbH,C=DE Subject: CN=QuoVadis Root Certification Authority,OU=Root Certification Authority,O=QuoVadis Limited,C=BM Subject: CN=VeriSign Universal Root Certification Authority,OU=(c) 2008 VeriSign\, Inc. - For authorized use only,OU=VeriSign Trust Network,O=VeriSign\, Inc.,C=US Subject: CN=GlobalSign,O=GlobalSign,OU=GlobalSign ECC Root CA - R5 Subject: OU=Starfield Class 2 Certification Authority,O=Starfield Technologies\, Inc.,C=US Subject: CN=T-TeleSec GlobalRoot Class 3,OU=T-Systems Trust Center,O=T-Systems Enterprise Services GmbH,C=DE Subject: CN=QuoVadis Root CA 2 G3,O=QuoVadis Limited,C=BM Subject: CN=T-TeleSec GlobalRoot Class 2,OU=T-Systems Trust Center,O=T-Systems Enterprise Services GmbH,C=DE Subject: CN=AffirmTrust Commercial,O=AffirmTrust,C=US Subject: OU=VeriSign Trust Network,OU=(c) 1998 VeriSign\, Inc. - For authorized use only,OU=Class 2 Public Primary Certification Authority - G2,O=VeriSign\, Inc.,C=US Subject: CN=TeliaSonera Root CA v1,O=TeliaSonera Subject: CN=NetLock Üzleti (Class B) Tanúsítványkiadó,OU=Tanúsítványkiadók (Certification Services),O=NetLock Kft.,L=Budapest,C=HU Subject: CN=COMODO ECC Certification Authority,O=COMODO CA Limited,L=Salford,ST=Greater Manchester,C=GB Subject: CN=GeoTrust Primary Certification Authority,O=GeoTrust Inc.,C=US Subject: CN=AddTrust Qualified CA Root,OU=AddTrust TTP Network,O=AddTrust AB,C=SE Subject: CN=Class 2 Primary CA,O=Certplus,C=FR Subject: CN=KEYNECTIS ROOT CA,OU=ROOT,O=KEYNECTIS,C=FR Subject: CN=IdenTrust Commercial Root CA 1,O=IdenTrust,C=US Subject: CN=Starfield Services Root Certificate Authority - G2,O=Starfield Technologies\, Inc.,L=Scottsdale,ST=Arizona,C=US [oracle@oracle19c wallet]$

I added the wallet location to the sqlnet.ora file and restarted the listener:

[oracle@oracle19c admin]$ cat sqlnet.ora # sqlnet.ora Network Configuration File: /u01/app/oracle/product/19c/network/admin/sqlnet.ora # Generated by Oracle configuration tools. NAMES.DIRECTORY_PATH= (TNSNAMES, EZCONNECT) WALLET_LOCATION=(SOURCE=(METHOD=FILE)(METHOD_DATA=(DIRECTORY=/u01/app/oracle/admin/wallet)))

Following the steps in the note I executed the following script to create the ACEs:

SQL> @@dbc_aces.sql

Session altered.

old 9: principal_name => upper('&clouduser'),

new 9: principal_name => upper('C##CLOUD$SERVICE'),

Enter value for proxy_host:

old 16: -- host =>'&proxy_host',

new 16: -- host =>'',

Enter value for proxy_low_port:

old 17: -- lower_port => &proxy_low_port,

new 17: -- lower_port => ,

Enter value for proxy_high_port:

old 18: -- upper_port => &proxy_high_port,

new 18: -- upper_port => ,

old 21: -- principal_name => upper('&clouduser'),

new 21: -- principal_name => upper('C##CLOUD$SERVICE'),

old 29: wallet_path => 'file:&sslwalletdir',

new 29: wallet_path => 'file:<Set',

old 32: principal_name => upper('&clouduser'),

new 32: principal_name => upper('C##CLOUD$SERVICE'),

PL/SQL procedure successfully completed.

old 4: execute immediate 'alter database property set ssl_wallet=''&sslwalletdir''';

new 4: execute immediate 'alter database property set ssl_wallet=''<Set''';

Enter value for proxy_uri:

old 8: -- execute immediate 'alter database property set http_proxy=''&proxy_uri''';

new 8: -- execute immediate 'alter database property set http_proxy=''''';

PL/SQL procedure successfully completed.

Session altered.

I created a new user called “CLOUD” under the PDB to test if the package is working as expected. After the user creation I granted the ACL permissions to the user:

SQL> alter session set container =orapdb;

Session altered.

SQL> create user cloud identified by *****;

User created.

SQL> grant create session to cloud;

Grant succeeded.

SQL> grant create table to cloud;

Grant succeeded.

SQL> grant read, write on directory DATA_PUMP_DIR to cloud;

Grant succeeded.

SQL> grant execute on dbms_cloud to cloud;

Grant succeeded.

SQL> @grant_acl.sql

Session altered.

old 9: principal_name => upper('&clouduser'),

new 9: principal_name => upper('CLOUD'),

old 17: -- host =>'&proxy_host',

new 17: -- host =>'<your',

old 18: -- lower_port => &proxy_low_port,

new 18: -- lower_port => <your_proxy_low_port>,

old 19: -- upper_port => &proxy_high_port,

new 19: -- upper_port => <your_proxy_high_port>,

old 22: -- principal_name => upper('&clouduser'),

new 22: -- principal_name => upper('CLOUD'),

old 30: wallet_path => 'file:&sslwalletdir',

new 30: wallet_path => 'file:<Set',

old 33: principal_name => upper('&clouduser'),

new 33: principal_name => upper('CLOUD'),

PL/SQL procedure successfully completed.

Session altered.

SQL>

Now we can test if the package works as expected and is executed by the CLOUD user. Use the testing script provided in the note:

SQL> @test.sql

old 1: CREATE OR REPLACE PROCEDURE &clouduser..GET_PAGE(url IN VARCHAR2) AS

new 1: CREATE OR REPLACE PROCEDURE C##CLOUD$SERVICE.GET_PAGE(url IN VARCHAR2) AS

old 13: wallet_path => 'file:&sslwalletdir',

new 13: wallet_path => 'file:/u01/app/oracle/admin/wallet',

old 14: wallet_password => '&sslwalletpwd');

new 14: wallet_password => 'oracle123');

Procedure created.

old 2: &clouduser..GET_PAGE('https://objectstorage.eu-frankfurt-1.oraclecloud.com');

new 2: C##CLOUD$SERVICE.GET_PAGE('https://objectstorage.eu-frankfurt-1.oraclecloud.com');

valid response

PL/SQL procedure successfully completed.

old 1: drop procedure &clouduser..GET_PAGE

new 1: drop procedure C##CLOUD$SERVICE.GET_PAGE

Procedure dropped.

The functionality is working so we can proceed to test some of its features.

Loading data into the database directly from the S3 bucket

You can find the full reference for the package here: https://docs.oracle.com/en/database/oracle/oracle-database/19/arpls/DBMS_CLOUD.html

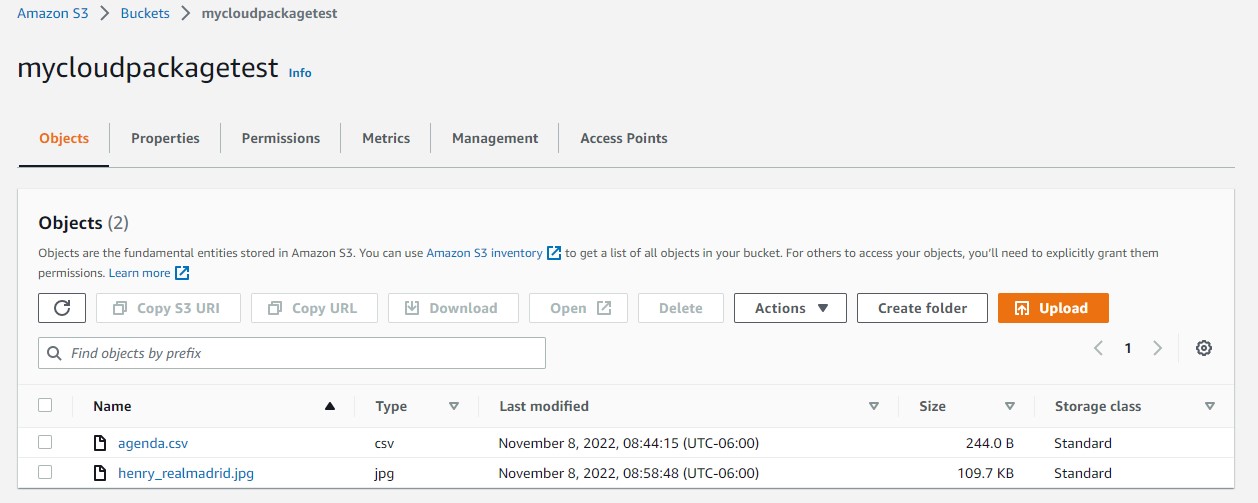

I will begin testing the COPY_DATA Procedure. In this scenario, we have an AWS S3 bucket that is storing CSV files. With the DBMS_CLOUD.COPY_DATA procedure we can use the CSV files in the S3 bucket to populate a table in the Oracle Database. I have already created an S3 bucket in my AWS account and placed a CSV file on it called “agenda.csv”. My bucket name is “mycloudpackagetest”

To make the connection possible we first need to create the credentials to access the S3 bucket. With the DBMS_CLOUD.CREATE_CREDENTIAL procedure we create the required credentials to access AWS. We just need the AWS access key ID as the username and the AWS secret access key as the password:

SET DEFINE OFF

BEGIN

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'AWS_S3_CONN',

username => 'AKIATA5ZEEYIU4OM6ENN',

password => 'aFgJmgqHG2Exja35zYZIkMffjzwYE5bwLQ7BYh+j'

);

END;

/

With the credential created I can test the connection to my bucket using the “LIST_OBJECTS” procedure that will list all the objects in the bucket:

SQL> SELECT * FROM DBMS_CLOUD.LIST_OBJECTS('AWS_S3_CONN', 'https://mycloudpackagetest.s3.amazonaws.com/');

OBJECT_NAME BYTES CHECKSUM CREATED LAST_MODIFIED

----------- ------ --------------------------------- ----------- -------------------------

agenda.csv 134 0f2c18a869ddb5d67082a9214b348139 08-NOV-22 02.21.11.000000 PM +00:00

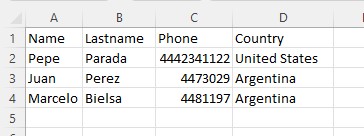

Good, it’s showing the only object under my bucket, the file agenda.csv. This is the content of the file.

I create a table on my PDB with the same columns as the CSV file.

SQL> create table agenda( 2 name varchar(20), 3 lastname varchar(20), 4 phone number, 5 country varchar(20)); Table created.

I execute the procedure to copy the data. Here I need to enter the credential I have already created, the target table name, the format required, and the columns.

SQL> BEGIN

DBMS_CLOUD.COPY_DATA(

table_name =>'AGENDA',

credential_name =>'AWS_S3_CONN',

file_uri_list =>'https://mycloudpackagetest.s3.amazonaws.com/agenda.csv',

format => json_object('type' value 'csv', 'skipheaders' value '1'),

field_list => 'NAME,

LASTNAME,

PHONE,

COUNTRY');

END;

/

PL/SQL procedure successfully completed.

Verify results.

SQL> select * from AGENDA; NAME LASTNAME PHONE COUNTRY -------------------- -------------------- ---------- -------------------- Pepe Parada 4442341122 United States Juan Perez 4473029 Argentina Marcelo Bielsa 4481197 Argentina 3 rows selected.

Incremental load option or external table benefits

What if the CSV file in the AWS Bucket is being updated from time to time? How can we keep the information in the Oracle table up to date?

We can create an external table using the “CREATE_EXTERNAL_TABLE” procedure. The execution is very similar to the COPY_DATA procedure but in this case, we need to list the source and target columns:

SQL> BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE(

table_name =>'AGENDA_EXT',

credential_name =>'AWS_S3_CONN',

file_uri_list =>'https://mycloudpackagetest.s3.amazonaws.com/agenda.csv',

format => json_object('type' value 'csv', 'skipheaders' value '1'),

field_list => 'NAME,

LASTNAME,

PHONE,

COUNTRY',

column_list => 'NAME VARCHAR(20),

LASTNAME VARCHAR(20),

PHONE NUMBER,

COUNTRY VARCHAR(20)');

END;

/

PL/SQL procedure successfully completed.

Every time we execute a select against the external table, the package will go and check the CSV file in the S3 bucket and read from it. This is the initial query:

SQL> select * from AGENDA_EXT; NAME LASTNAME PHONE COUNTRY -------------------- -------------------- ---------- -------------------- Pepe Parada 4442341122 United States Juan Perez 4473029 Argentina Marcelo Bielsa 4481197 Argentina

I added new lines to the CSV in the S3 bucket. If execute the query again:

SQL> select * from AGENDA_EXT; NAME LASTNAME PHONE COUNTRY -------------------- -------------------- ---------- -------------------- Pepe Parada 4442341122 United States Juan Perez 4473029 Argentina Marcelo Bielsa 4481197 Argentina Thierry Henry 9001239900 France Robin Van Persie 8883213344 Holland Pavel Nevdev 8112231122 Czech Republic 6 rows selected.

The new rows are there. From here we can populate a normal table or just use the data for real-time queries and reports. If you lose connection against the S3 bucket, the data won’t be there anymore.

Let’s check one final procedure. The “PUT_OBJECT” procedure lets us copy an object from our Oracle Database to the S3 bucket. It can be a BLOB or any file within an Oracle Database directory. In this case, I have a picture I want to copy to the S3. The file should be in an Oracle Database directory and the DB user executing the statement should have at least read privileges to the directory:

SQL> grant read on directory DATA_PUMP_DIR to cloud; Grant succeeded. SQL> BEGIN DBMS_CLOUD.PUT_OBJECT ( credential_name =>'AWS_S3_CONN', object_uri =>'https://mycloudpackagetest.s3.amazonaws.com/henry_realmadrid.jpg', directory_name =>'DATA_PUMP_DIR', file_name =>'henry_realmadrid.jpg'); END; / PL/SQL procedure successfully completed. SQL>

We can check the file got copied to the S3 bucket:

SQL> select object_name,created,last_modified,bytes from DBMS_CLOUD.LIST_OBJECTS('AWS_S3_CONN', 'https://mycloudpackagetest.s3.amazonaws.com/');

OBJECT_NAME CREATED LAST_MODIFIED BYTES

---------------------------------------- -------------------- ---------------------------------------- ----------

agenda.csv 08-NOV-22 02.44.15.000000 PM +00:00 244

henry_realmadrid.jpg 08-NOV-22 02.58.48.000000 PM +00:00 112338

To conclude

Again, please check the reference for the package to know about all the functions. These tools are really helpful during these cloud times and open the possibilities for DBAs and Developers to be more productive and create better solutions. Let’s hope Oracle keeps adding more procedures to the package and connections to other public clouds.

Oracle Database Consulting Services

Ready to optimize your Oracle Database for the future?

Share this

Share this

More resources

Learn more about Pythian by reading the following blogs and articles.

Oracle Database 12c Patching: DBMS_QOPATCH, OPATCH_XML_INV, and datapatch

Expand your Oracle Tuning Tools with dbms_utility.expand_sql_text

Issues With Database Service Names Starting With Pluggable Database (PDB) Name

Ready to unlock value from your data?

With Pythian, you can accomplish your data transformation goals and more.