OEM 13c Monitoring Features - Part 1

Overview

To take advantage of OEM's monitoring tools, it's important to set it up according to your needs. For basic monitoring, these are the most important items to work through to make sure you're properly monitoring your IT environment:

- Target groups

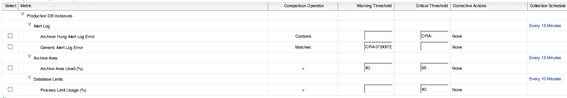

- Metrics and thresholds

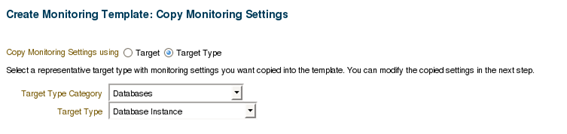

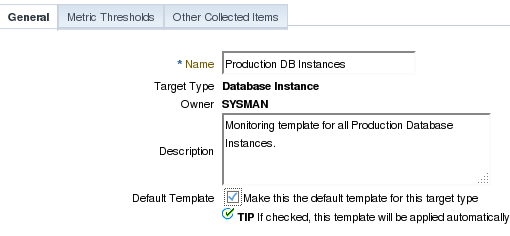

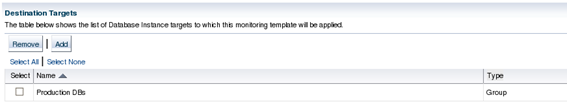

- Monitoring templates

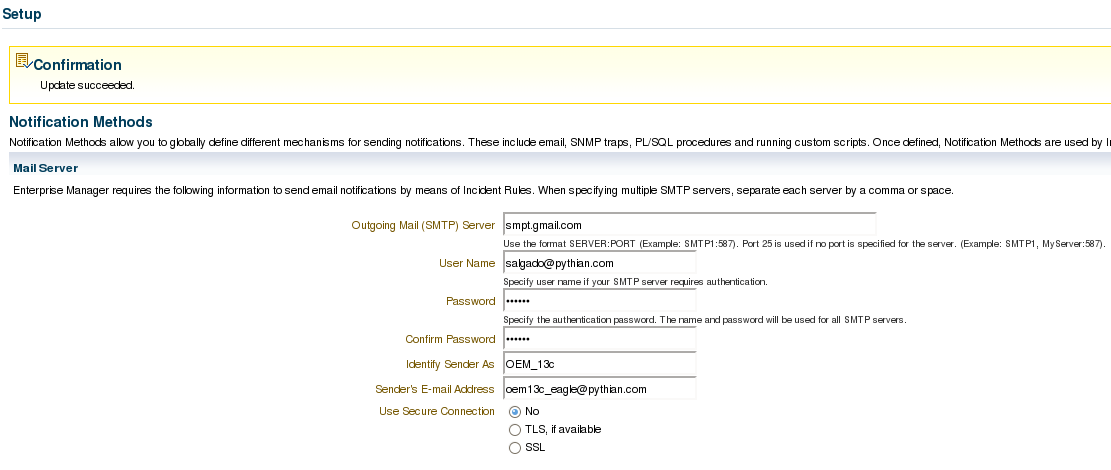

- Notification methods

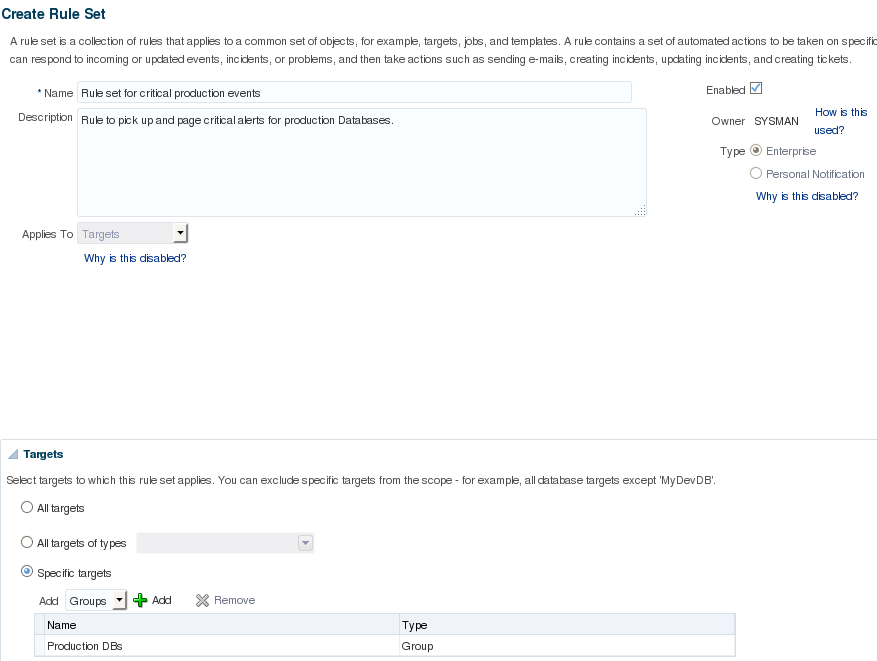

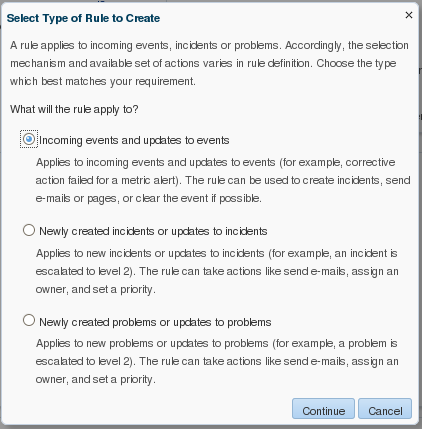

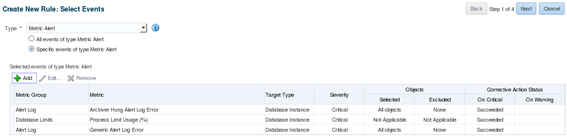

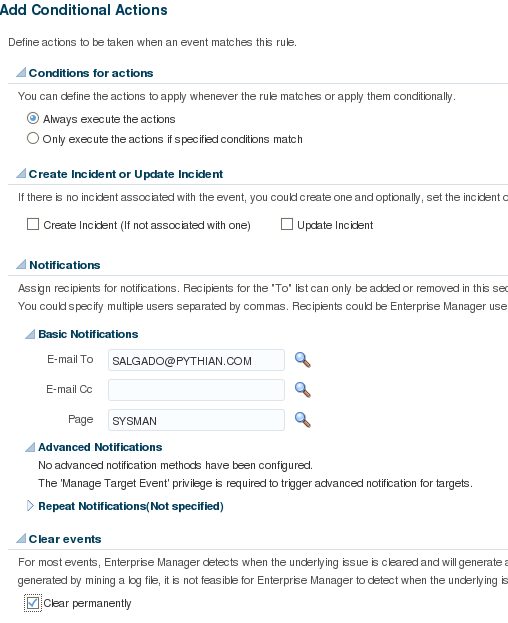

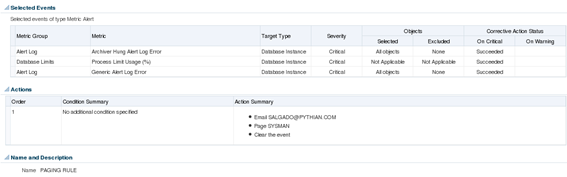

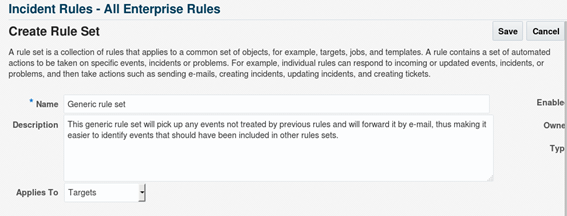

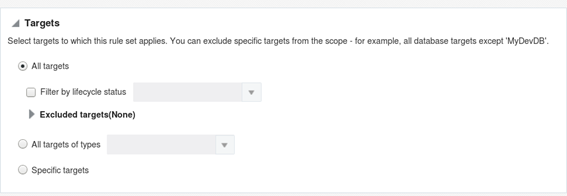

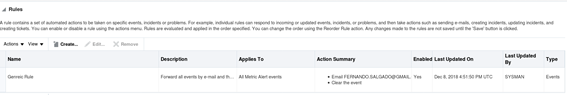

- Incident rules

Basic monitoring features

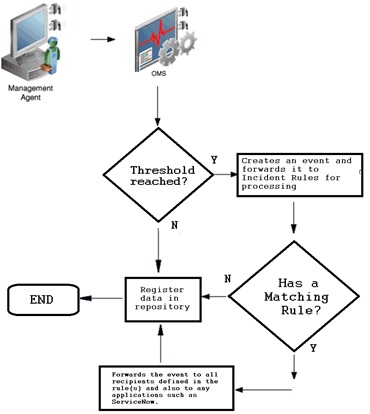

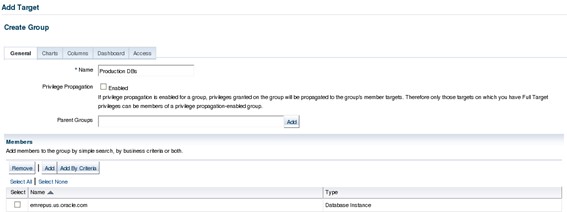

To send proper notifications, OEM uses metric thresholds and incident rules. To properly monitor the IT environment with OEM, it's important to carefully select which metrics should be collected and on which targets. The best way to achieve this is by setting up different target groups and monitoring templates. Groups usually follow the needs of the targets: one group for production targets, one for development, and so on. But groups may also be created based on other criteria such as database sizes, serviced applications, etc. Groups can be created on the "Targets -> Groups" page.
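Groups can also be scripted instead of created in the console. The following is a minimal sketch, not the method used in this post: it sorts a list of targets by environment and builds one EMCLI `create_group` command per group. It assumes the `create_group` verb takes `-name` and `-add_targets` in `name:type;name:type` form (check `emcli help create_group` on your installation). The target names, the `lifecycle` field, and the `_GRP` naming scheme are hypothetical, and the commands are only printed, never executed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    name: str         # target name as registered in OEM (hypothetical values below)
    target_type: str  # OEM target type, e.g. "oracle_database" or "host"
    lifecycle: str    # grouping criterion chosen for this sketch


def group_by_lifecycle(targets):
    """Return a dict mapping each lifecycle value to its list of targets."""
    groups = {}
    for target in targets:
        groups.setdefault(target.lifecycle, []).append(target)
    return groups


def create_group_command(group_name, members):
    """Build the argument list for an assumed `emcli create_group` call."""
    add_targets = ";".join(f"{t.name}:{t.target_type}" for t in members)
    return [
        "emcli",
        "create_group",
        f"-name={group_name}",
        f"-add_targets={add_targets}",
    ]


# Hypothetical inventory: two production targets and one development target.
inventory = [
    Target("orcl_prod", "oracle_database", "production"),
    Target("orcl_dev", "oracle_database", "development"),
    Target("dbhost1", "host", "production"),
]

for lifecycle, members in group_by_lifecycle(inventory).items():
    # Only print the command; review it before running it on a real OMS.
    print(" ".join(create_group_command(f"{lifecycle.upper()}_GRP", members)))
```

The same grouping function works for any other criterion mentioned above (database size, serviced application) by swapping the field used as the dictionary key.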
