Backup strategies in Cassandra

Backups help you recover from situations such as:

- Errors made in data updates by client applications

- Accidental deletions

- Catastrophic failures that require the entire cluster to be rebuilt

- Data corruption

- A desire to roll back the cluster to a previous known good state

Setting up a backup strategy

When setting up your backup strategy, consider the following points:

- Secondary storage footprint: Depending on the backup frequency and retention period, backups can occupy much more space than the live database. It is therefore vital to design an efficient storage solution that keeps storage CapEx (capital expenditure) as low as possible.

- Recovery point objective (RPO): The maximum targeted period in which data might be lost from service due to a significant incident.

- Recovery time objective (RTO): The targeted duration of time, and the service level, within which a backup must be restored after a disaster or disruption to avoid unacceptable consequences associated with a break in business continuity.

- Backup performance: Backup throughput should at least match the data change rate in the Cassandra cluster.
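
To make these considerations concrete, here is a minimal planning sketch. The `BackupPlan` fields and the footprint formula are illustrative assumptions (retained full snapshots plus retained daily changes), not Cassandra settings or an official sizing method.

```python
from dataclasses import dataclass


@dataclass
class BackupPlan:
    # All fields are hypothetical planning inputs, not Cassandra settings.
    snapshot_size_gb: float              # size of one full snapshot
    snapshots_retained: int              # number of full snapshots kept
    daily_change_gb: float               # data written per day
    retention_days: int                  # days of incremental data kept
    backup_throughput_gb_per_day: float  # what the backup pipeline can copy


def estimated_footprint_gb(plan: BackupPlan) -> float:
    """Rough secondary storage estimate: retained snapshots plus retained increments."""
    return (plan.snapshot_size_gb * plan.snapshots_retained
            + plan.daily_change_gb * plan.retention_days)


def keeps_up_with_changes(plan: BackupPlan) -> bool:
    """Backup performance check: throughput must at least match the change rate."""
    return plan.backup_throughput_gb_per_day >= plan.daily_change_gb


plan = BackupPlan(
    snapshot_size_gb=500.0,
    snapshots_retained=7,
    daily_change_gb=20.0,
    retention_days=30,
    backup_throughput_gb_per_day=50.0,
)
print(estimated_footprint_gb(plan))  # 500*7 + 20*30 = 4100.0
print(keeps_up_with_changes(plan))   # True
```

Even this rough model shows how quickly retention multiplies the footprint relative to a single 500 GB snapshot.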

Backup alternatives

Snapshot-based backups

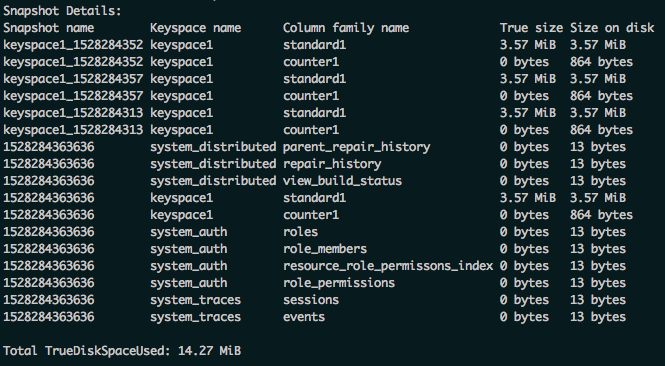

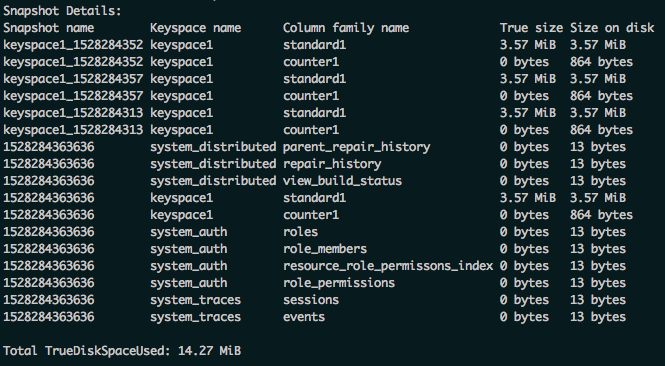
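
As a rough sketch of working with snapshots on a single node, the Python below builds a nodetool snapshot call and checks a snapshot directory against its manifest.json. The helper names are hypothetical, and the assumption that the manifest lists file names under a `files` key should be verified against your Cassandra version.

```python
import json
from pathlib import Path


def snapshot_command(tag: str, keyspace: str) -> list[str]:
    """Build the nodetool call that snapshots one keyspace on the local node."""
    return ["nodetool", "snapshot", "-t", tag, keyspace]


def missing_snapshot_files(snapshot_dir: Path) -> list[str]:
    """Return manifest entries that are absent from the snapshot directory."""
    manifest = json.loads((snapshot_dir / "manifest.json").read_text())
    # Assumption: the manifest stores SSTable file names under a "files" key.
    return [name for name in manifest.get("files", [])
            if not (snapshot_dir / name).exists()]
```

The command list can be passed to `subprocess.run(..., check=True)` on each node; an empty result from `missing_snapshot_files` suggests the snapshot directory is complete.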

The purpose of a snapshot is to make a copy of all or part of the keyspaces and tables on a node and to keep it in a separate snapshot directory. When you take a snapshot, Cassandra first performs a flush to push any data residing in the memtables to disk (SSTables), and then makes a hard link to each SSTable file. Each snapshot contains a manifest.json file that lists the SSTable files included in the snapshot, so you can verify that the entire contents of the snapshot are present. The nodetool snapshot command operates at the node level, meaning that you will need to run it at the same time on multiple nodes.

Incremental backups
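
For incremental backups, a minimal sketch of the collection step might look like this. It assumes the usual on-disk layout of `<data_dir>/<keyspace>/<table>/backups/`; `find_incremental_files` is a hypothetical helper, while `nodetool enablebackup` is the command that turns the feature on for a node.

```python
from pathlib import Path

# Turns on incremental backups on the local node (run via subprocess if desired).
ENABLE_BACKUP_CMD = ["nodetool", "enablebackup"]


def find_incremental_files(data_dir: Path) -> list[Path]:
    """List files in every <keyspace>/<table>/backups directory under data_dir."""
    # The pattern only matches direct children of "backups", so files under
    # "snapshots/<tag>/" or the live table directory are not picked up.
    return sorted(p for p in data_dir.glob("*/*/backups/*") if p.is_file())
```

A backup job would copy the returned files to secondary storage and then delete them locally, since the backups directories are not emptied automatically.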

When incremental backups are enabled, Cassandra creates backups as part of the process of flushing SSTables to disk. The backup consists of a hard link to each data file, stored in a backup directory. In Cassandra, incremental backups contain only new SSTable files, making them dependent on the last snapshot created. Files created due to compaction are not hard linked.

Incremental backups in combination with snapshot
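
When snapshots and incremental backups are combined, a restore needs the latest snapshot plus the incremental files taken after it. The sketch below illustrates that selection logic on a hypothetical `Backup` catalog record; it is not part of Cassandra or nodetool.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Backup:
    kind: str           # "snapshot" or "incremental"
    taken_at: datetime  # when the backup was captured
    name: str           # hypothetical identifier in your backup catalog


def restore_set(backups: list[Backup], target: datetime) -> list[Backup]:
    """Pick the latest snapshot at or before target, plus later incrementals up to target."""
    snapshots = [b for b in backups if b.kind == "snapshot" and b.taken_at <= target]
    if not snapshots:
        raise ValueError("no snapshot exists at or before the target time")
    base = max(snapshots, key=lambda b: b.taken_at)
    increments = sorted(
        (b for b in backups
         if b.kind == "incremental" and base.taken_at < b.taken_at <= target),
        key=lambda b: b.taken_at,
    )
    return [base, *increments]
```

The granularity of the result is bounded by how often memtables are flushed, because incremental files only appear at flush time.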

By combining both methods, you can achieve finer backup granularity. Data is backed up periodically via the snapshot, and incremental backup files provide the granularity between scheduled snapshots.

Commit log backup in combination with snapshot
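
Commit log archiving is configured through the commitlog_archiving.properties file. The sketch below renders such a file from Python; the property names and the `yyyy:MM:dd HH:mm:ss` timestamp format reflect the sample file shipped with Cassandra as I understand it, so check them against your version. The function names and the archive path are hypothetical.

```python
from datetime import datetime, timezone
from typing import Optional


def restore_point(ts: datetime) -> str:
    """Format a timezone-aware timestamp as yyyy:MM:dd HH:mm:ss in GMT."""
    return ts.astimezone(timezone.utc).strftime("%Y:%m:%d %H:%M:%S")


def archiving_properties(archive_dir: str,
                         restore_to: Optional[datetime] = None) -> str:
    """Render a commitlog_archiving.properties body for the given archive directory."""
    lines = [
        # %path and %name are placeholders that Cassandra substitutes per segment.
        f"archive_command=/bin/cp %path {archive_dir}/%name",
        "restore_command=/bin/cp -f %from %to",
        f"restore_directories={archive_dir}",
    ]
    if restore_to is not None:
        # Only set when replaying archived logs up to a point in time.
        lines.append(f"restore_point_in_time={restore_point(restore_to)}")
    return "\n".join(lines) + "\n"


print(archiving_properties("/backup/commitlog"))
```

Passing `restore_to` only makes sense during a restore; for day-to-day archiving the `archive_command` line is the one that matters.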

This approach is similar to incremental backups combined with snapshots. Rather than relying on incremental backups to back up newly added SSTables, commit logs are archived. As with the previous solution, snapshots provide the bulk of the backup data, while the commit log archive is used for point-in-time backup.

Commit log backup in combination with snapshot and incremental

In addition to incremental backups, commit logs are archived. This process relies on a feature called Commitlog Archiving. As with the previous solution, snapshots provide the bulk of the backup data, incremental backups complement them, and the commit log archive is used for point-in-time backup. Due to the nature of commit logs, it is not possible to restore them to a node other than the one they were backed up from. This limitation restricts the scope of restoring commit logs in case of catastrophic hardware failure. (And a node is not fully restored, only its data.)

Datacenter backup
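
A backup datacenter is typically added by extending a keyspace's replication settings. The following sketch builds the corresponding CQL statement, assuming NetworkTopologyStrategy and a replication factor of 1 in the backup datacenter; the function name, keyspace, and datacenter names are hypothetical.

```python
def backup_dc_replication_cql(keyspace: str, live_dc: str, live_rf: int,
                              backup_dc: str, backup_rf: int = 1) -> str:
    """Build an ALTER KEYSPACE statement that replicates to a backup datacenter."""
    return (
        f"ALTER KEYSPACE {keyspace} WITH replication = {{"
        f"'class': 'NetworkTopologyStrategy', "
        f"'{live_dc}': {live_rf}, '{backup_dc}': {backup_rf}}};"
    )


print(backup_dc_replication_cql("shop", "dc_live", 3, "dc_backup"))
```

After the keyspace is altered, nodes in the new datacenter usually still need to stream the existing data (for example with nodetool rebuild) before they hold a full copy.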

With this setup, Cassandra streams data to the backup datacenter as it is added. This mechanism avoids cumbersome snapshot-based backups that require files to be stored on a network. However, it will not protect against a developer mistake (e.g., deletion of data) unless there is a time buffer between the two datacenters.

Backup options comparison

| TYPE | ADVANTAGES | DISADVANTAGES |
|---|---|---|
| Snapshot-based backups | Simple to manage: Requires only a scheduled snapshot command to run on each of the nodes. The Cassandra nodetool utility provides the clearsnapshot command, which removes the snapshot files. (Auto snapshots on table drops are not visible to this command.) | Potentially large RPO: Snapshots require flushing all in-memory data to disk; therefore, frequent snapshot calls will impact the cluster’s performance. Storage footprint: Depending on factors such as workload type, compaction strategy, or versioning interval, compaction may cause the same data to be backed up several times over, increasing Capital Expenditure (CapEx). Snapshot storage management overhead: Cassandra admins are expected to move the snapshot files to a safe location such as AWS S3. |
| Incremental Backups | Better storage utilization: There are no duplicate records in the backup, as compacted files are not backed up. Point-in-time backup: Companies can achieve a better RPO, as backing up from the incremental backup folder is a continuous process. | Space management overhead: The incremental backup folders must be emptied after being backed up. Failure to do so may cause severe space issues on the cluster. Spread across many files: Since incremental backups create files every time a flush occurs, they typically produce many small files, which complicates file management and recovery and can affect RTO and the service level. |
| Incremental Backups in Combination with Snapshots | Large backup files: Only the data written between snapshots comes from the incremental backups. Point-in-time: It provides point-in-time backup and restore. | Space management overhead: Every time a snapshot is backed up, data needs to be cleaned up. Operationally burdensome: Requires DBAs to script solutions. |
| CommitLog Backup in Combination with Snapshot and Incremental | Point-in-time: It provides the best point-in-time backup and restore. | Space management overhead: Every time a snapshot is backed up, data needs to be cleaned up, which increases Operational Expenditure (OpEx). Restore complexity: Restore is more complicated, as part of it happens through commit log replay. Storage overhead: Snapshot-based backup adds storage overhead because compaction duplicates data, resulting in higher CapEx. Highly complex: Because it involves three kinds of backups, plus streaming and managing the commit log, it is a highly sophisticated backup solution. |
| Datacenter Backup | Hot backup: It can provide a swift way to restore data. Space management: Using RF = 1, you can avoid data replication. | Additional datacenter: Since it requires a new datacenter to be built, it needs higher CapEx as well as OpEx. Prone to developer mistakes: Will not protect from developer mistakes (unless there is a time buffer, as mentioned above). |
