Getting started with Orchestrator

Prep work

1. Create a MySQL user on each of your database servers. Orchestrator will connect with this user to discover the topology and to perform any changes you tell it to make.

CREATE USER 'orchestrator'@'%' IDENTIFIED BY '****';

GRANT SUPER, PROCESS, REPLICATION SLAVE, RELOAD ON *.* TO 'orchestrator'@'%';

GRANT SELECT ON mysql.slave_master_info TO 'orchestrator'@'%';

GRANT SELECT ON meta.* TO 'orchestrator'@'%';

Note: Orchestrator reads the replication credentials stored in the mysql.slave_master_info table, so your servers need to be set up with the master_info_repository = 'TABLE' option if you want Orchestrator to be able to make topology changes on your behalf.

2. (Optional) Create a table to store the cluster name on each of your database servers. If you set this up on every server, Orchestrator will pick up the cluster name automatically. You could skip this step and rename the clusters via the GUI later, but it is nice to have.

CREATE DATABASE meta;

CREATE TABLE meta.cluster (

anchor TINYINT NOT NULL,

cluster_name VARCHAR(128) CHARSET ascii NOT NULL DEFAULT '',

cluster_domain VARCHAR(128) CHARSET ascii NOT NULL DEFAULT '',

PRIMARY KEY (anchor)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

INSERT INTO meta.cluster (anchor, cluster_name, cluster_domain) VALUES (1, 'testcluster', 'example.com');
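To see how a name stored this way can be read back, here is a minimal Python sketch that uses an in-memory SQLite database as a stand-in for a MySQL server. It assumes a lookup query shaped like the DetectClusterAliasQuery setting configured later in this post, with an empty string as the fallback. The function names are made up for illustration, and the column definitions are simplified because SQLite has no ENGINE or CHARSET clauses.

```python
import sqlite3

# Same shape as the DetectClusterAliasQuery setting; '' is the fallback
# returned when meta.cluster has no row with anchor=1.
ALIAS_QUERY = (
    "SELECT ifnull(max(cluster_name), '') AS cluster_alias "
    "FROM meta.cluster WHERE anchor=1"
)


def make_meta_db():
    """Create an in-memory stand-in for the meta schema (illustrative only)."""
    conn = sqlite3.connect(":memory:")
    # ATTACH lets SQLite address the table as meta.cluster, as MySQL does.
    conn.execute("ATTACH DATABASE ':memory:' AS meta")
    conn.execute(
        """
        CREATE TABLE meta.cluster (
            anchor TINYINT NOT NULL,
            cluster_name VARCHAR(128) NOT NULL DEFAULT '',
            cluster_domain VARCHAR(128) NOT NULL DEFAULT '',
            PRIMARY KEY (anchor)
        )
        """
    )
    return conn


def detect_cluster_alias(conn):
    """Run the alias query and return the single value it yields."""
    return conn.execute(ALIAS_QUERY).fetchone()[0]


if __name__ == "__main__":
    conn = make_meta_db()
    # No row yet, so the query falls back to the empty string.
    print(repr(detect_cluster_alias(conn)))
    conn.execute(
        "INSERT INTO meta.cluster (anchor, cluster_name, cluster_domain) "
        "VALUES (1, 'testcluster', 'example.com')"
    )
    print(repr(detect_cluster_alias(conn)))
```

The anchor column with its primary key keeps the table to one row per anchor value, so the query always resolves to a single name.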

Installation steps

1. Get the prerequisites. The Orchestrator client requires the jq package, which is available from the EPEL repo, so let's install that first:

sudo yum install epel-release

sudo yum install jq

2. Install Orchestrator. Orchestrator is readily available as a .deb or .rpm package, so let's use that:

sudo yum install https://github.com/github/orchestrator/releases/download/v3.0.11/orchestrator-3.0.11-1.x86_64.rpm

3. Prepare the configuration file. Let's start by copying the sample config file:

cp /usr/local/orchestrator/orchestrator-sample.conf.json /etc/orchestrator.conf.json

There are a lot of settings we can tweak, but to get started, we need to configure the following values:

- "MySQLTopologyUser"

- "MySQLTopologyPassword"

- "DetectClusterAliasQuery"

- "BackendDB"

- "SQLite3DataFile"

{

"Debug": true,

"EnableSyslog": false,

"ListenAddress": ":3000",

"MySQLTopologyUser": "orchestrator",

"MySQLTopologyPassword": "****",

"MySQLTopologyCredentialsConfigFile": "",

"MySQLTopologySSLPrivateKeyFile": "",

"MySQLTopologySSLCertFile": "",

"MySQLTopologySSLCAFile": "",

"MySQLTopologySSLSkipVerify": true,

"MySQLTopologyUseMutualTLS": false,

"MySQLOrchestratorHost": "127.0.0.1",

"MySQLOrchestratorPort": 3306,

"MySQLOrchestratorDatabase": "orchestrator",

"MySQLOrchestratorUser": "orc_server_user",

"MySQLOrchestratorPassword": "orc_server_password",

"MySQLOrchestratorCredentialsConfigFile": "",

"MySQLOrchestratorSSLPrivateKeyFile": "",

"MySQLOrchestratorSSLCertFile": "",

"MySQLOrchestratorSSLCAFile": "",

"MySQLOrchestratorSSLSkipVerify": true,

"MySQLOrchestratorUseMutualTLS": false,

"MySQLConnectTimeoutSeconds": 1,

"DefaultInstancePort": 3306,

"DiscoverByShowSlaveHosts": true,

"InstancePollSeconds": 5,

"UnseenInstanceForgetHours": 240,

"SnapshotTopologiesIntervalHours": 0,

"InstanceBulkOperationsWaitTimeoutSeconds": 10,

"HostnameResolveMethod": "default",

"MySQLHostnameResolveMethod": "@@hostname",

"SkipBinlogServerUnresolveCheck": true,

"ExpiryHostnameResolvesMinutes": 60,

"RejectHostnameResolvePattern": "",

"ReasonableReplicationLagSeconds": 10,

"ProblemIgnoreHostnameFilters": [],

"VerifyReplicationFilters": false,

"ReasonableMaintenanceReplicationLagSeconds": 20,

"CandidateInstanceExpireMinutes": 60,

"AuditLogFile": "",

"AuditToSyslog": false,

"RemoveTextFromHostnameDisplay": ".mydomain.com:3306",

"ReadOnly": false,

"AuthenticationMethod": "",

"HTTPAuthUser": "",

"HTTPAuthPassword": "",

"AuthUserHeader": "",

"PowerAuthUsers": [

"*"

],

"ClusterNameToAlias": {

"127.0.0.1": "test suite"

},

"SlaveLagQuery": "",

"DetectClusterAliasQuery": "SELECT ifnull(max(cluster_name), '') as cluster_alias from meta.cluster where anchor=1;",

"DetectClusterDomainQuery": "",

"DetectInstanceAliasQuery": "",

"DetectPromotionRuleQuery": "",

"DataCenterPattern": "[.]([^.]+)[.][^.]+[.]mydomain[.]com",

"PhysicalEnvironmentPattern": "[.]([^.]+[.][^.]+)[.]mydomain[.]com",

"PromotionIgnoreHostnameFilters": [],

"DetectSemiSyncEnforcedQuery": "",

"ServeAgentsHttp": false,

"AgentsServerPort": ":3001",

"AgentsUseSSL": false,

"AgentsUseMutualTLS": false,

"AgentSSLSkipVerify": false,

"AgentSSLPrivateKeyFile": "",

"AgentSSLCertFile": "",

"AgentSSLCAFile": "",

"AgentSSLValidOUs": [],

"UseSSL": false,

"UseMutualTLS": false,

"SSLSkipVerify": false,

"SSLPrivateKeyFile": "",

"SSLCertFile": "",

"SSLCAFile": "",

"SSLValidOUs": [],

"URLPrefix": "",

"StatusEndpoint": "/api/status",

"StatusSimpleHealth": true,

"StatusOUVerify": false,

"AgentPollMinutes": 60,

"UnseenAgentForgetHours": 6,

"StaleSeedFailMinutes": 60,

"SeedAcceptableBytesDiff": 8192,

"PseudoGTIDPattern": "",

"PseudoGTIDPatternIsFixedSubstring": false,

"PseudoGTIDMonotonicHint": "asc:",

"DetectPseudoGTIDQuery": "",

"BinlogEventsChunkSize": 10000,

"SkipBinlogEventsContaining": [],

"ReduceReplicationAnalysisCount": true,

"FailureDetectionPeriodBlockMinutes": 60,

"RecoveryPollSeconds": 10,

"RecoveryPeriodBlockSeconds": 3600,

"RecoveryIgnoreHostnameFilters": [],

"RecoverMasterClusterFilters": [

".*"

],

"RecoverIntermediateMasterClusterFilters": [

"_intermediate_master_pattern_"

],

"OnFailureDetectionProcesses": [

"echo 'Detected {failureType} on {failureCluster}. Affected replicas: {countSlaves}' >> /tmp/recovery.log"

],

"PreFailoverProcesses": [

"echo 'Will recover from {failureType} on {failureCluster}' >> /tmp/recovery.log"

],

"PostFailoverProcesses": [

"echo '(for all types) Recovered from {failureType} on {failureCluster}. Failed: {failedHost}:{failedPort}; Successor: {successorHost}:{successorPort}' >> /tmp/recovery.log"

],

"PostUnsuccessfulFailoverProcesses": [],

"PostMasterFailoverProcesses": [

"echo 'Recovered from {failureType} on {failureCluster}. Failed: {failedHost}:{failedPort}; Promoted: {successorHost}:{successorPort}' >> /tmp/recovery.log"

],

"PostIntermediateMasterFailoverProcesses": [

"echo 'Recovered from {failureType} on {failureCluster}. Failed: {failedHost}:{failedPort}; Successor: {successorHost}:{successorPort}' >> /tmp/recovery.log"

],

"CoMasterRecoveryMustPromoteOtherCoMaster": true,

"DetachLostSlavesAfterMasterFailover": true,

"ApplyMySQLPromotionAfterMasterFailover": true,

"MasterFailoverDetachSlaveMasterHost": false,

"MasterFailoverLostInstancesDowntimeMinutes": 0,

"PostponeSlaveRecoveryOnLagMinutes": 0,

"OSCIgnoreHostnameFilters": [],

"GraphiteAddr": "",

"GraphitePath": "",

"GraphiteConvertHostnameDotsToUnderscores": true,

"BackendDB": "sqlite",

"SQLite3DataFile": "/usr/local/orchestrator/orchestrator.db"

}
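Before starting the service, it can help to confirm that the five values listed above are actually filled in. The following Python sketch is one way to do that, assuming the SQLite backend used in this post and the /etc/orchestrator.conf.json path from the copy step. The function names are hypothetical and not part of Orchestrator.

```python
import json

# The five settings this post asks you to configure.
REQUIRED_KEYS = [
    "MySQLTopologyUser",
    "MySQLTopologyPassword",
    "DetectClusterAliasQuery",
    "BackendDB",
    "SQLite3DataFile",
]


def missing_settings(config):
    """Return the required keys that are absent or empty in a parsed config."""
    return [key for key in REQUIRED_KEYS if not config.get(key)]


def check_config_file(path="/etc/orchestrator.conf.json"):
    """Parse the JSON config at path and report missing or empty settings."""
    with open(path, encoding="utf-8") as handle:
        config = json.load(handle)
    return missing_settings(config)


if __name__ == "__main__":
    # Small inline sample so the sketch runs without a real config file.
    sample = {"MySQLTopologyUser": "orchestrator", "BackendDB": "sqlite"}
    print("Still to configure:", missing_settings(sample))
```

Calling check_config_file() with no arguments reads the file created in step 3. A JSON syntax error in the file surfaces as a json.JSONDecodeError, which is itself a useful check after hand-editing.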

4. Prepare systemd script. At the time of this writing, a systemd script to manage start/stop of the service is not included. I set that up as follows:

vi /etc/systemd/system/orchestrator.service

[Unit]

Description="orchestrator: MySQL replication management and visualization"

After=syslog.target network.target

[Service]

Type=simple

ExecStart=/usr/local/orchestrator/orchestrator --verbose http

PIDFile=/var/run/orchestrator.pid

WorkingDirectory=/usr/local/orchestrator

[Install]

WantedBy=multi-user.target

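5. Reload systemd so it picks up the new unit file, then start the service. On startup, Orchestrator reads the configuration file /etc/orchestrator.conf.json.

sudo systemctl daemon-reload

sudo systemctl start orchestrator

That's it for the installation steps.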
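To confirm that the service is answering, you can poll the status endpoint. The sketch below is a rough check in Python, built from the ListenAddress (":3000") and StatusEndpoint ("/api/status") values in the config above. It assumes plain HTTP, since UseSSL is false, and assumes the endpoint answers with HTTP 200 when Orchestrator is healthy. The function names are illustrative.

```python
import http.client
import urllib.request


def status_url(host="127.0.0.1", port=3000, endpoint="/api/status"):
    """Build the status URL from the ListenAddress and StatusEndpoint values."""
    return f"http://{host}:{port}{endpoint}"


def is_up(url, timeout=3.0):
    """Return True if the URL answers with HTTP 200, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # Refused connections, timeouts, and HTTP error statuses land here.
        return False


if __name__ == "__main__":
    url = status_url()
    print(url, "is up" if is_up(url) else "is not responding")
```

If the check fails, the service logs (for example via journalctl -u orchestrator) are the next place to look.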

Using the GUI

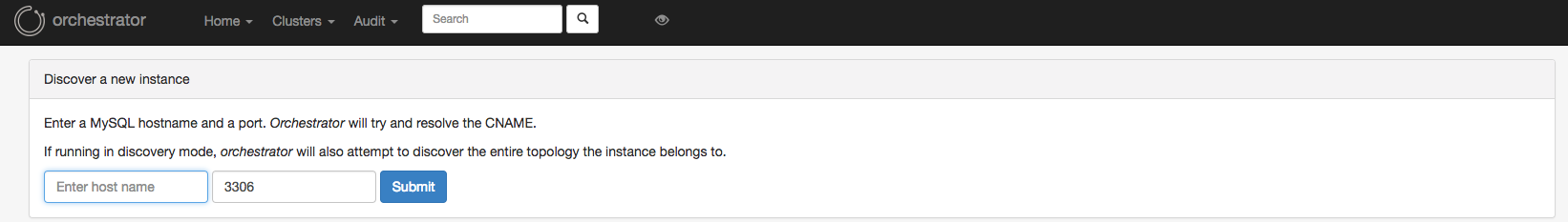

The first step is pointing Orchestrator to a starting host. It will then automatically discover any other hosts that are members of the same cluster by crawling up and down the replication chain.

1. Using a browser, open the GUI (by default port 3000) on the host where you installed Orchestrator: http://192.168.56.100:3000/

2. Click 'Discover' on the top bar, enter the IP address and port of any host you want, and hit the Submit button.

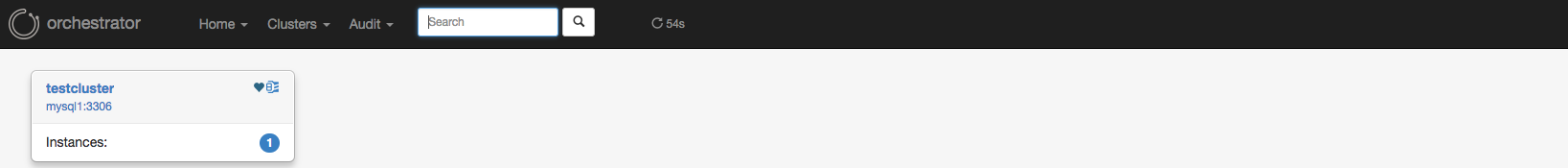
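The same discovery can be triggered without the browser. The following Python sketch assumes Orchestrator's HTTP API exposes a discover endpoint of the form /api/discover/:host/:port, and that the server speaks plain HTTP because UseSSL is false in the config above. Treat the endpoint path as an assumption to verify against your version, and note that 192.168.56.101 is a made-up database host.

```python
import json
import urllib.request
from urllib.parse import quote


def discover_url(base_url, host, port=3306):
    """Build the assumed /api/discover/:host/:port URL for one instance."""
    base = base_url.rstrip("/")
    return f"{base}/api/discover/{quote(host, safe='')}/{int(port)}"


def discover(base_url, host, port=3306, timeout=10.0):
    """Ask Orchestrator to discover host:port and return the decoded JSON reply."""
    url = discover_url(base_url, host, port)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Only prints the URL; call discover() once the service is running.
    print(discover_url("http://192.168.56.100:3000", "192.168.56.101"))
```

As in the GUI, a single seed host should be enough, since Orchestrator crawls the replication chain from there.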

3. Click on Clusters -> Dashboard. After a few seconds, you should see the cluster being shown (refresh the page if needed).

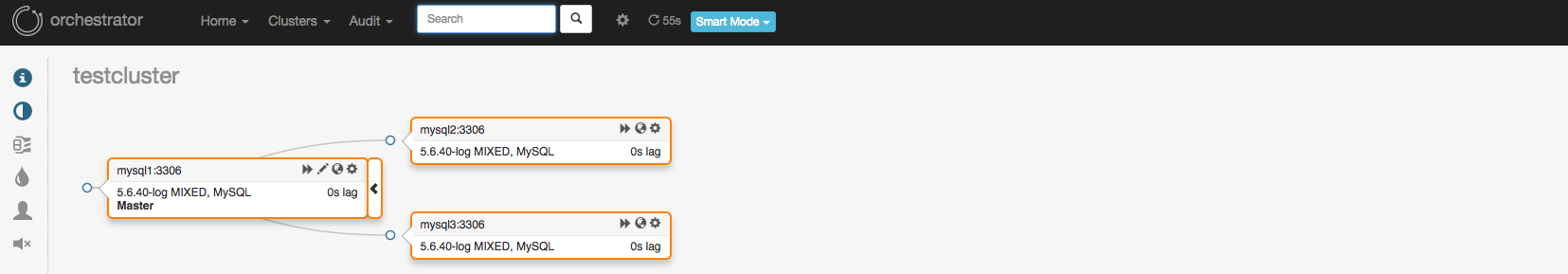

4. Now click on the cluster name and you should see the details about all current members of the replication topology.

The cluster should have automatically been named according to what is specified in the meta.cluster table. You can also use the GUI to drag & drop to perform topology changes, provided you are using GTID or pseudo-GTID (which is a topic for a different post).

Closing thoughts

Orchestrator is rapidly becoming the go-to topology management tool in the MySQL world. I encourage you to start playing with it as soon as possible. If you are interested in learning more, I suggest you also check out the following posts in the Pythian blog. Happy Orchestrating!

https://blog.pythian.com/mysql-high-availability-with-haproxy-consul-and-orchestrator/

https://blog.pythian.com/graceful-master-switchover-proxysql-orchestrator/
