Neo4j cluster using Vagrant, Ansible and VirtualBox

Recently, I needed to create and configure a Neo4j cluster. To be able to experiment easily with different settings and configurations, I decided to create a local Neo4j cluster using Vagrant, Ansible and VirtualBox. This post explains the process step by step. As you will see, it is not that difficult :)

Neo4j is a graph database management system developed by Neo4j, Inc. Described by its developers as an ACID-compliant transactional database with native graph storage and processing, Neo4j is the most popular graph database according to the DB-Engines ranking. Neo4j comes in two editions: community and enterprise. It is dual-licensed: GPLv3, and AGPLv3 / commercial. The community edition is free but is limited to running on a single node, because it lacks clustering, and it has no hot backups. The enterprise edition removes these limitations, allowing for clustering, hot backups and monitoring.

Vagrant is an open-source software product for building and maintaining portable virtual software development environments, e.g. for VirtualBox, Hyper-V, Docker containers, VMware and AWS. It tries to simplify the configuration management of virtualized environments in order to increase development productivity. Vagrant is written in Ruby, but its ecosystem supports development in a few languages.

Ansible is software that automates software provisioning, configuration management and application deployment. It is one of the most popular "configuration as code" tools.

Prerequisites

Before you start, you need the following software installed on your machine: VirtualBox, Vagrant and Ansible.

Please note: You can find all the code described below in the GitHub repo of this post.

Start with Vagrant

Boxes are the package format for Vagrant environments. A box can be used by anyone on any platform that Vagrant supports to bring up an identical working environment. The easiest way to use a box is to add one from the publicly available catalogue of Vagrant boxes. You can also add and share your own customized boxes on that website.

To start, create an empty folder, cd into it and run [code]vagrant init ubuntu/trusty64[/code] This command creates a file called Vagrantfile in your folder. Once it is in place, you can run vagrant up, which will create and start a virtual machine based on the ubuntu/trusty64 image. Once the VM is up, you can run vagrant ssh to ssh into it and run Linux commands. Other useful commands are vagrant halt, which shuts down the running machine that Vagrant is managing, and vagrant destroy, which removes all traces of the guest machine from your system.

Create a configuration file

This is a good time to create a central configuration file. We will use this file to define parameters that will be read by both Vagrant and Ansible (more on this later). We will modify the Vagrantfile, which is really a Ruby script underneath, so that it reads a YAML configuration file which we will call vagrant.yml. First, we create vagrant.yml in the same folder as the Vagrantfile and insert the following contents into it:

[code]
---
vm_box: ubuntu/trusty64
vm_name: neo4j-node
vm_memory: 4096
vm_gui: false
[/code]

Bear in mind that, because this file is YAML, indentation is important. Next, we modify our Vagrantfile by adding the following lines at the top:

[code language="ruby"]
require 'yaml'
settings = YAML.load_file 'vagrant.yml'
[/code]

These lines import the required Ruby module and load our vagrant.yml file into a variable called settings. Now we can reference the configuration variables. For example, we can change the line:

[code language="ruby"]
config.vm.box = "ubuntu/trusty64"
[/code]

to

[code language="ruby"]
config.vm.box = settings['vm_box']
[/code]

This lets us change the box later, should we want to test our setup on a different operating system or version. At this point, we can also control other settings. If we are using VirtualBox, we can find the config.vm.provider "virtualbox" section of our Vagrantfile, uncomment it and modify it as follows:

[code language="ruby"]
config.vm.provider "virtualbox" do |vb|
  # Display the VirtualBox GUI when booting the machine
  vb.gui = settings['vm_gui']
  # Customize the amount of memory on the VM:
  vb.memory = settings['vm_memory']
  # Customize the name of the VM:
  vb.name = settings['vm_name']
end
[/code]

This allows us to control several settings of our VirtualBox VM (such as the name and the memory) based on the values we define in our vagrant.yml file. Now that we have a configuration file, we are ready to proceed to the next step.
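To see what the Vagrantfile actually gets back from YAML.load_file, here is a small standalone Ruby sketch (not part of the original repo) that parses the same vagrant.yml contents from an inline string. It also shows why a typo in a key name is easy to miss: Hash#[] returns nil for a missing key, while Hash#fetch raises a KeyError.

```ruby
require 'yaml'

# The vagrant.yml contents shown above, inlined so this sketch runs on its own.
# In the Vagrantfile, YAML.load_file 'vagrant.yml' reads the same data from disk.
settings = YAML.safe_load(<<~YML)
  ---
  vm_box: ubuntu/trusty64
  vm_name: neo4j-node
  vm_memory: 4096
  vm_gui: false
YML

# YAML gives typed values: an Integer for the memory and a boolean for the GUI flag
puts settings['vm_memory'].class   # Integer
puts settings['vm_gui'].inspect    # false

# A misspelled key silently yields nil with Hash#[] ...
puts settings['vm_memmory'].inspect   # nil

# ... whereas Hash#fetch fails fast with a KeyError
begin
  settings.fetch('vm_memmory')
rescue KeyError => e
  puts "KeyError: #{e.message}"
end
```

A hypothetical variation in the Vagrantfile would be vb.memory = settings.fetch('vm_memory'), so that a missing or misspelled entry stops vagrant up with an error instead of silently passing nil along.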

Defining multiple machines

Multiple machines are defined within the same project Vagrantfile using the config.vm.define method call. We want the number of nodes to be configurable, so we first add the following line to our vagrant.yml configuration file:

[code]
cluster_size: 3
[/code]

Now we have to edit our Vagrantfile in order to introduce a loop. To do this, find the line containing:

[code language="ruby"]
Vagrant.configure("2") do |config|
[/code]

and insert the following lines after it:

[code language="ruby"]
# Loop with node_number taking values from 1 to the configured cluster size
(1..settings['cluster_size']).each do |node_number|
  # Define node_name by appending the node number to the configured vm_name
  node_name = settings['vm_name'] + "#{node_number}"
  # Define settings for each node
  config.vm.define node_name do |node|
[/code]

For the rest of the Vagrantfile we have to:

- Replace references to config with node
- Replace settings['vm_name'] with node_name
- Insert two end statements at the end of the file (one closing the config.vm.define block and one closing the loop)

Now, when we run vagrant up, three machines will be created in VirtualBox: neo4j-node1, neo4j-node2 and neo4j-node3.
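The loop derives each machine name from just two settings, so its effect is easy to check outside Vagrant. This standalone sketch uses a hypothetical helper, node_names (not something in the Vagrantfile), that reproduces the naming expression for a given cluster_size.

```ruby
# Mirrors the Vagrantfile loop: one machine name per node number.
# vm_name and cluster_size correspond to the values in vagrant.yml.
def node_names(vm_name, cluster_size)
  (1..cluster_size).map { |node_number| vm_name + "#{node_number}" }
end

puts node_names('neo4j-node', 3).inspect   # ["neo4j-node1", "neo4j-node2", "neo4j-node3"]

# Changing cluster_size in vagrant.yml is all it takes to resize the cluster
puts node_names('neo4j-node', 5).last      # neo4j-node5
```

These are also the names you pass to per-machine commands such as vagrant ssh neo4j-node1 or vagrant halt neo4j-node2.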

Networking

Vagrant private networks allow you to access your guest machine by an address that is not publicly accessible from the global internet. In general, this means your machine gets an address in the private address space. Multiple machines within the same private network (also usually with the restriction that they're backed by the same provider) can communicate with each other on private networks. We need to specify static IP addresses for our machines and make sure that the static IPs do not collide with any other machines on the same network. To do this, we first add the following line to our vagrant.yml configuration file:

[code]
vm_ip_prefix: 192.168.3
[/code]

The last part of each node's IP is determined by simply adding 10 to the node number. So we add the following lines to our Vagrantfile script, inside the config.vm.define block:

[code language="ruby"]
# Determine node_ip based on the configured vm_ip_prefix
node_ip = settings['vm_ip_prefix']+"."+"#{node_number+10}"
# Create a private network, which allows access to the machine using node_ip
node.vm.network "private_network", ip: node_ip
[/code]

Now, after we run vagrant up, we can test network connectivity. We should be able to run ping 192.168.3.11, ping 192.168.3.12 and ping 192.168.3.13 from our host and get a response. We should also be able to run the same commands from within each node and get a response.
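The same kind of standalone check works for the addressing scheme. The sketch below uses hypothetical helpers, node_ip and node_ips (not part of the Vagrantfile), to reproduce the node_number + 10 rule, and Ruby's standard IPAddr class to reject malformed results. That is one way to notice, for example, that a very large cluster_size would push the last octet past 255.

```ruby
require 'ipaddr'

# Same rule as the Vagrantfile: last octet = node_number + 10
def node_ip(vm_ip_prefix, node_number)
  vm_ip_prefix + "." + "#{node_number + 10}"
end

# All node IPs for a cluster. IPAddr.new raises IPAddr::InvalidAddressError
# (a subclass of ArgumentError) if an address is malformed, e.g. an octet above 255.
def node_ips(vm_ip_prefix, cluster_size)
  ips = (1..cluster_size).map { |node_number| node_ip(vm_ip_prefix, node_number) }
  ips.each { |ip| IPAddr.new(ip) }
  ips
end

puts node_ips('192.168.3', 3).inspect   # ["192.168.3.11", "192.168.3.12", "192.168.3.13"]

# Node 246 would get 192.168.3.256, which IPAddr rejects
begin
  node_ips('192.168.3', 250)
rescue ArgumentError => e
  puts "Rejected: #{e.message}"
end
```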

Provisioning Java and Neo4j with Ansible

So far, we have focused on creating a cluster of virtual machines. At this point, the machines we have created are bare-bones: only the operating system has been installed, and the network between them (and our host) has been configured. However, we need a way to install other software on them. This is where Ansible comes in handy.

Ansible is a configuration management and orchestration tool, similar to Chef, Puppet or Salt. You only need to install Ansible on the control server or node. It communicates with the managed machines and performs the required tasks over SSH, so no other installation is required. This is different from tools like Chef and Puppet, where you have to install software on both the control and client nodes.

Ansible uses configuration files called playbooks to define a series of tasks to be executed. Playbooks are written in YAML syntax. Ansible also uses the concept of “roles” to organize Ansible code into multiple nested folders and make it modular. Simply put, roles are folders containing Ansible code, organized according to specific conventions, that achieve one specific goal (e.g. installing Java). Organizing things into roles allows you to reuse common configuration steps. In most cases, you can search for a role developed by someone else, download it and use it as is or with minor modifications to suit your specific needs.

One final advantage of Ansible is that it is fully supported by Vagrant. In fact, Vagrant has two different provisioners for Ansible: the “Ansible (remote) Provisioner” and the “Ansible Local Provisioner”, which runs ansible-playbook on the guest itself. The Vagrant Ansible (remote) provisioner allows you to provision the guest using Ansible playbooks by executing ansible-playbook from the Vagrant host. We will use this one because we don’t want Ansible to be installed on our guest machines. Of course, this means we need to have Ansible installed on our host machine.
So the first step is to add the following code to our Vagrantfile script, again inside the config.vm.define block:

[code language="ruby"]
# Ansible provisioning
# Disable the new default behavior introduced in Vagrant 1.7, to
# ensure that all Vagrant machines will use the same SSH key pair.
# See https://github.com/mitchellh/vagrant/issues/5005
node.ssh.insert_key = false

# Determine neo4j_initial_hosts
initial_node_ip = settings['vm_ip_prefix']+"."+"11"
host_coordination_port = settings['neo4j_host_coordination_port'].to_s
neo4j_initial_hosts = initial_node_ip + ":" + host_coordination_port

# Call Ansible also passing it values needed for configuration
node.vm.provision "ansible" do |ansible|
  ansible.verbose = "v"
  ansible.playbook = "playbook.yml"
  ansible.extra_vars = {
    node_ip_address: node_ip,
    neo4j_server_id: node_number,
    neo4j_initial_hosts: neo4j_initial_hosts
  }
end
[/code]

The above code does three things:

- Configures SSH so that all machines use the same key pair instead of Vagrant inserting a new key into each one (required for compatibility)
- Prepares an extra variable that will be needed for the Neo4j cluster configuration (neo4j_initial_hosts)
- Calls Vagrant’s Ansible provisioner, passing it the name of the playbook to execute (playbook.yml) and any variables that are known at this time and will be needed later by Ansible. For example, the Vagrant variable node_ip is passed to the Ansible variable node_ip_address, so whenever we use the expression {{ node_ip_address }} within an Ansible template, it will be substituted with the actual IP address that was assigned to the particular node when it was created by Vagrant.

Note that this code reads neo4j_host_coordination_port from the settings, so vagrant.yml also needs an entry for it, e.g. neo4j_host_coordination_port: 5001 (the port that ha.host.coordination listens on in the Neo4j configuration).
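To make the hand-off between Vagrant and Ansible concrete, the sketch below computes the extra_vars hash for each node and then fills in a few template lines with it. It is a simplified illustration, not how Ansible works internally: the render helper is hypothetical and only substitutes plain {{ name }} placeholders, whereas Ansible uses full Jinja2. It also assumes vagrant.yml sets neo4j_host_coordination_port to 5001.

```ruby
# Assumed vagrant.yml values; neo4j_host_coordination_port: 5001 is an
# assumption matching the ha.host.coordination port in neo4j.conf
settings = {
  'vm_ip_prefix' => '192.168.3',
  'cluster_size' => 3,
  'neo4j_host_coordination_port' => 5001
}

# Same expressions as in the Vagrantfile: every node is pointed at node 1
initial_node_ip = settings['vm_ip_prefix'] + "." + "11"
neo4j_initial_hosts = initial_node_ip + ":" + settings['neo4j_host_coordination_port'].to_s

# The extra_vars hash that Vagrant passes to Ansible for each node
extra_vars_by_node = (1..settings['cluster_size']).map do |node_number|
  {
    node_ip_address: settings['vm_ip_prefix'] + "." + "#{node_number + 10}",
    neo4j_server_id: node_number,
    neo4j_initial_hosts: neo4j_initial_hosts
  }
end

# Hypothetical, much-simplified stand-in for Jinja2: it only replaces
# plain {{ name }} placeholders with the matching value from vars
def render(template, vars)
  template.gsub(/\{\{\s*(\w+)\s*\}\}/) { vars.fetch(Regexp.last_match(1).to_sym).to_s }
end

# A few lines in the style of the neo4j.conf template
TEMPLATE = <<~CONF
  ha.server_id={{ neo4j_server_id }}
  ha.initial_hosts={{ neo4j_initial_hosts }}
  ha.host.coordination={{ node_ip_address }}:5001
CONF

# Render for node 2 (array index 1)
puts render(TEMPLATE, extra_vars_by_node[1])
# ha.server_id=2
# ha.initial_hosts=192.168.3.11:5001
# ha.host.coordination=192.168.3.12:5001
```

If neo4j_host_coordination_port were missing from vagrant.yml, settings['neo4j_host_coordination_port'] would be nil, and since nil.to_s is an empty string, neo4j_initial_hosts would silently become 192.168.3.11: with no port.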

The playbook.yml file, placed in the same folder as the Vagrantfile, tells Ansible to:

- Configure all hosts (all of our cluster nodes in this case)
- Become the root user (using sudo) before running each configuration task
- Gather facts (information such as network interfaces and operating system details) about each server before running the tasks
- Run the tasks defined in the ansible-java8-oracle role folder
- Run the tasks defined in the ansible-neo4j role folder
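The bullet list above maps onto a single Ansible play. As a sketch (a reconstruction from that list, so the author's actual playbook.yml may differ), here it is built as Ruby data and dumped with to_yaml, keeping to the Vagrantfile's language. hosts, become, gather_facts and roles are standard Ansible play keywords.

```ruby
require 'yaml'

# Hypothetical reconstruction of playbook.yml from the steps listed above.
# A playbook is a list of plays; this one contains a single play.
play = {
  'hosts' => 'all',          # configure every host in the inventory
  'become' => true,          # escalate privileges (sudo by default) for each task
  'gather_facts' => true,    # collect host facts before running the tasks
  'roles' => %w[ansible-java8-oracle ansible-neo4j] # roles run in this order
}

playbook_yaml = [play].to_yaml
puts playbook_yaml
```

Saving the printed YAML as playbook.yml next to the Vagrantfile gives a playbook of this shape. become: true is the current spelling of the older sudo: yes, and gather_facts already defaults to true, so that line is only there to mirror the list.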

We made the following changes to the files of the ansible-neo4j role:

ansible-neo4j/defaults/main.yml: This file contains variables used by all other files.

- Set neo4j_package: neo4j-enterprise, since we want the Neo4j Enterprise Edition in order to use clustering
- Deleted all unused variables

ansible-neo4j/tasks/install_neo4j.yml: This file contains the tasks to be performed to install Neo4j.

- Removed the task to install Java (handled by the Java role)
- Removed tasks related to the user id (not working)
- Removed tasks related to the Neo4j wrapper (deprecated)
- Modified tasks related to limits to use the pam_limits module

ansible-neo4j/tasks/main.yml: This file contains all the tasks to be performed within the Neo4j role.

- Removed tasks related to the Neo4j wrapper (deprecated)

ansible-neo4j/tasks/install_neo4j_spatial.yml

- Deleted the file (deprecated)

ansible-neo4j/handlers/main.yml: This file contains tasks that are triggered in response to ‘notify’ actions called by other tasks. They are only triggered once, at the end of a ‘play’, even if notified by multiple different tasks.

- Removed tasks related to the Neo4j wrapper (deprecated)

ansible-neo4j/templates/neo4j.conf: This file contains all the configuration for Neo4j. The most interesting changes are:

1. Changes to paths of directories, security and upgrade settings:

[code]
# Paths of directories in the installation.
dbms.directories.data=/var/lib/neo4j/data
dbms.directories.plugins=/var/lib/neo4j/plugins
dbms.directories.certificates=/var/lib/neo4j/certificates
dbms.directories.logs=/var/log/neo4j
dbms.directories.lib=/usr/share/neo4j/lib
dbms.directories.run=/var/run/neo4j
dbms.directories.metrics=/var/lib/neo4j/metrics

# This setting constrains all `LOAD CSV` import files to be under the `import` directory. Remove or comment it out to
# allow files to be loaded from anywhere in the filesystem; this introduces possible security problems. See the
# `LOAD CSV` section of the manual for details.
# dbms.directories.import=/var/lib/neo4j/import
dbms.directories.import=/vagrant/csv

# Whether requests to Neo4j are authenticated.
# To disable authentication, uncomment this line
dbms.security.auth_enabled=false

# Enable this to be able to upgrade a store from an older version.
dbms.allow_upgrade=true
[/code]

2. Use of the node_ip_address variable for network configuration:

[code]
#*****************************************************************
# Network connector configuration
#*****************************************************************

# With default configuration Neo4j only accepts local connections.
# To accept non-local connections, uncomment this line:
dbms.connectors.default_listen_address={{ node_ip_address }}

# You can also choose a specific network interface, and configure a non-default
# port for each connector, by setting their individual listen_address.

# The address at which this server can be reached by its clients. This may be the server's IP address or DNS name, or
# it may be the address of a reverse proxy which sits in front of the server. This setting may be overridden for
# individual connectors below.
dbms.connectors.default_advertised_address={{ node_ip_address }}

# You can also choose a specific advertised hostname or IP address, and
# configure an advertised port for each connector, by setting their
# individual advertised_address.

# Bolt connector
dbms.connector.bolt.enabled=true
#dbms.connector.bolt.tls_level=OPTIONAL
dbms.connector.bolt.listen_address=:7687

# HTTP Connector. There must be exactly one HTTP connector.
dbms.connector.http.enabled=true
dbms.connector.http.listen_address=:7474

# HTTPS Connector. There can be zero or one HTTPS connectors.
dbms.connector.https.enabled=true
dbms.connector.https.listen_address=:7473
[/code]

3. High Availability cluster configuration (using variables):

[code]
#*****************************************************************
# HA configuration
#*****************************************************************

# Uncomment and specify these lines for running Neo4j in High Availability mode.
# See the High Availability documentation at https://neo4j.com/docs/ for details.

# Database mode
# Allowed values:
# HA - High Availability
# SINGLE - Single mode, default.
# To run in High Availability mode uncomment this line:
dbms.mode=HA

# ha.server_id is the number of each instance in the HA cluster. It should be
# an integer (e.g. 1), and should be unique for each cluster instance.
ha.server_id={{ neo4j_server_id }}

# ha.initial_hosts is a comma-separated list (without spaces) of the host:port
# where the ha.host.coordination of all instances will be listening. Typically
# this will be the same for all cluster instances.
ha.initial_hosts={{ neo4j_initial_hosts }}

# IP and port for this instance to listen on, for communicating cluster status
# information with other instances (also see ha.initial_hosts). The IP
# must be the configured IP address for one of the local interfaces.
ha.host.coordination={{ node_ip_address }}:5001

# IP and port for this instance to listen on, for communicating transaction
# data with other instances (also see ha.initial_hosts). The IP
# must be the configured IP address for one of the local interfaces.
ha.host.data={{ node_ip_address }}:6001

# The interval, in seconds, at which slaves will pull updates from the master. You must comment out
# the option to disable periodic pulling of updates.
ha.pull_interval=10
[/code]

Test your cluster

At this point, everything is in place. Now you can run vagrant up, which will create and configure three VirtualBox machines that will start and join each other to form a Neo4j HA cluster. (Note: if you have been following along and experimenting, you might want to run vagrant destroy first in order to start clean.) The cluster will be available as soon as the first machine is up and running. Every time another machine comes up, it will join the cluster and replicate the database. You can access the Neo4j Browser of each machine over the HTTP connector (port 7474) at:

http://192.168.3.11:7474 (neo4j-node1)
http://192.168.3.12:7474 (neo4j-node2)
http://192.168.3.13:7474 (neo4j-node3)

A sample CSV file has also been included in the GitHub repo of this post. Because dbms.directories.import points at /vagrant/csv, and Vagrant shares the project folder with each VM as /vagrant by default, a file in the csv subfolder of the project can be loaded by name alone. You can load this file using the following command in Neo4j Browser:

[code]
LOAD CSV FROM 'file:///genres.csv' AS line
CREATE (:Genre { GenreId: line[0], Name: line[1]})
[/code]

You can then check the results using:

[code]MATCH (n) RETURN (n)[/code]

This should return 115 Genre nodes and 0 relationships. To prove to yourself that this is actually a cluster and that the data is replicated automatically, you can run the above MATCH command on any node and you should get the same result. You can also bring down a node (e.g. vagrant halt neo4j-node2). As long as the first node is running, all the other live nodes should remain responsive and in sync. You can test each node using the MATCH command above. If you bring a node back up (e.g. vagrant up neo4j-node2), the node will rejoin the cluster and the latest state of the database will be replicated to it as well. Finally, you can run :sysinfo on any node to see more information on the state of the cluster.
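If you would rather not click through three browser tabs, the same comparison can be scripted. The sketch below is not from the post's repo. It assumes Neo4j 3.x's HTTP transactional Cypher endpoint (/db/data/transaction/commit), the node IPs used in this post and authentication disabled as in the neo4j.conf above, and it counts Genre nodes on each machine.

```ruby
require 'json'
require 'net/http'
require 'uri'

# Node addresses from this post; HTTP connector on port 7474, auth disabled.
NODE_IPS = %w[192.168.3.11 192.168.3.12 192.168.3.13].freeze
COUNT_QUERY = 'MATCH (g:Genre) RETURN count(g)'.freeze

# JSON body for the transactional endpoint: a single Cypher statement
def request_body(statement)
  JSON.generate(statements: [{ statement: statement }])
end

# Extracts the scalar from the first row of the first result
def extract_count(response_json)
  JSON.parse(response_json)['results'][0]['data'][0]['row'][0]
end

# Returns the Genre count reported by one node, or nil if the node
# cannot be reached or the response is not as expected (e.g. after vagrant halt)
def genre_count(ip)
  uri = URI("http://#{ip}:7474/db/data/transaction/commit")
  http = Net::HTTP.new(uri.host, uri.port)
  http.open_timeout = 2
  http.read_timeout = 5
  response = http.post(uri.path, request_body(COUNT_QUERY),
                       'Content-Type' => 'application/json')
  extract_count(response.body)
rescue StandardError
  nil
end

if __FILE__ == $PROGRAM_NAME
  counts = NODE_IPS.map { |ip| [ip, genre_count(ip)] }.to_h
  counts.each { |ip, count| puts "#{ip}: #{count.inspect}" }
  live = counts.values.compact
  puts(live.uniq.size <= 1 ? 'live nodes agree' : 'live nodes disagree')
end
```

Run it from the host with ruby after loading the CSV. With neo4j-node2 halted, that node should show nil while the live nodes report the same count.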

Conclusion

This has been a long post, but we have accomplished a lot. We have set up a local Neo4j cluster in High Availability mode that accurately models a real three-server setup, and we did it in a way that is easily customizable, modular, cross-platform, shareable and reproducible, using tools like Vagrant and Ansible. We can resize the cluster up or down and test with different operating system versions and Neo4j configuration settings. Finally, if you exclude all the detailed explanations, the end result is just a few files containing simple code. Although simple, this code automates a complicated task that would otherwise be slow, error-prone and difficult to share and reproduce reliably. Not too bad for a weekend’s work :) Happy experimenting with your new Neo4j cluster!!!

You May Also Like

These Related Stories

Cassandra 3.9 Security feature walk-through

![]()

Cassandra 3.9 Security feature walk-through

Oct 19, 2016

15

min read

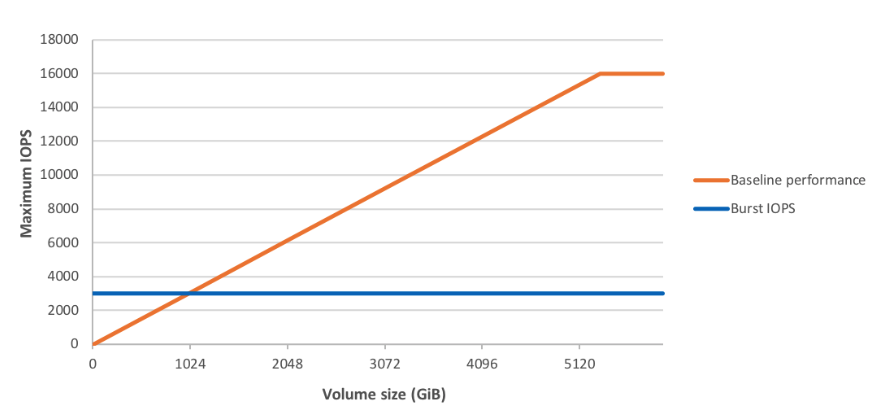

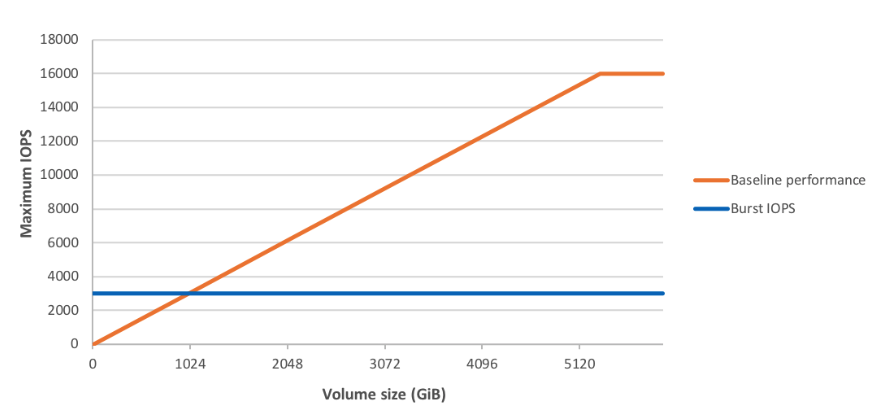

How to get the most out of your EBS performance

How to get the most out of your EBS performance

Sep 5, 2019

3

min read

How To Delete MGMTDB and MGMTLSNR Using emcli

![]()

How To Delete MGMTDB and MGMTLSNR Using emcli

Jul 17, 2019

1

min read

No Comments Yet

Let us know what you think