Essential Prerequisites for Deploying LLM Applications in Production

Yes, I know: we’ve all been bombarded with AI presentations, offerings, news, and more over the last year and a half. Even so, my conversations with customers tell me that many organizations are struggling to take this amazing new technology and put it to actual work.

Many folks are considering deploying a production application that uses Large Language Models (LLMs), but as with any cutting-edge technology, you're in for an exciting, winding journey.

Before you dive in, there are some crucial technical prerequisites you need to have in place. As we have begun implementing Generative AI applications within our customer base, the prerequisites for a successful project have become clearer, and being prepared for these will save a lot of pain down the road. Let's break down these prerequisites and why they matter.

Data quality and governance

The old adage "garbage in, garbage out," long applied to analytics and ML, still applies to Generative AI. LLMs, especially in Retrieval-Augmented Generation (RAG) setups, are only as good as the data you feed them. Whether you are retrieving knowledge base documents or using more sophisticated configurations, such as generating SQL to retrieve actual database records, if the data is not trustworthy, it will not matter how good the LLM is.

A poor corpus of data will lead to inaccurate outputs, biased responses, or even security vulnerabilities. You need to ensure that your LLM has access to reliable, up-to-date information and that your retrieval and prompt engineering inputs are of high quality.

Recommendations:

- Data quality processes for both structured and semi-structured data (documents)

- Processes for keeping the RAG indexes relevant and updated

- Regular monitoring of the application output to make sure quality is not degrading
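
One way to keep RAG indexes relevant is to periodically compare what the index holds against the source system. The sketch below is a minimal illustration, not a complete pipeline. It assumes each indexed document records a hypothetical `indexed_at` timestamp and that you can read a last-modified time for every document from the source. Documents edited after indexing, deleted upstream, or never indexed are the ones that need attention.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class IndexedDoc:
    doc_id: str
    indexed_at: datetime  # when this document was last embedded (assumed metadata field)


def find_index_drift(
    index: list[IndexedDoc], source_modified: dict[str, datetime]
) -> dict[str, list[str]]:
    """Compare the RAG index against the source system's last-modified times."""
    indexed = {doc.doc_id: doc for doc in index}
    drift = {"changed": [], "deleted": [], "missing": []}
    for doc_id, doc in indexed.items():
        modified = source_modified.get(doc_id)
        if modified is None:
            # Removed upstream, but the index can still serve it to the LLM.
            drift["deleted"].append(doc_id)
        elif modified > doc.indexed_at:
            # Edited after it was embedded: the index is serving stale text.
            drift["changed"].append(doc_id)
    for doc_id in source_modified:
        if doc_id not in indexed:
            # New source document that has never been indexed.
            drift["missing"].append(doc_id)
    return drift


index = [
    IndexedDoc("a", datetime(2024, 5, 1)),
    IndexedDoc("b", datetime(2024, 5, 1)),
    IndexedDoc("c", datetime(2024, 5, 1)),
]
source = {"a": datetime(2024, 4, 1), "b": datetime(2024, 6, 1), "d": datetime(2024, 6, 1)}
print(find_index_drift(index, source))
# {'changed': ['b'], 'deleted': ['c'], 'missing': ['d']}
```

Running a check like this on a schedule, and re-indexing or purging whatever it reports, turns "keep the index updated" into a measurable process.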

Infrastructure scalability

LLMs often require significant compute power, and for many applications, user demand can fluctuate a lot. Without scalable infrastructure, your application may become slow or unresponsive during high-traffic periods, or you might end up overprovisioning and spending far more money than necessary.

This is an area where the cloud is often the best solution. Most hyperscalers, such as Azure and Google Cloud, offer “LLM as a Service,” which lets you consume resources on demand and pay based on the tokens you process. Once your application’s resource requirements are clearer, you can move to a reserved capacity model, where you pay for a fixed amount of compute at a discounted rate.
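
Deciding when to move from on-demand to reserved capacity is largely arithmetic: find the monthly token volume at which the flat reserved fee becomes cheaper than paying per token. The sketch below uses made-up placeholder prices, not any vendor's actual rates, and assumes the reservation is a single flat monthly fee that covers your whole volume.

```python
def monthly_on_demand_cost(tokens_per_month: float, price_per_1k_tokens: float) -> float:
    """Pay-as-you-go: cost scales linearly with the tokens you process."""
    return tokens_per_month / 1000 * price_per_1k_tokens


def break_even_tokens(reserved_monthly_fee: float, price_per_1k_tokens: float) -> float:
    """Monthly token volume at which a flat reserved fee equals the on-demand bill."""
    return reserved_monthly_fee / price_per_1k_tokens * 1000


# Placeholder figures for illustration only; substitute your vendor's actual pricing.
PRICE_PER_1K = 0.01     # hypothetical on-demand price per 1,000 tokens (USD)
RESERVED_FEE = 5_000.0  # hypothetical flat monthly fee for reserved capacity (USD)

print(f"Break-even: {break_even_tokens(RESERVED_FEE, PRICE_PER_1K):,.0f} tokens/month")
# Break-even: 500,000,000 tokens/month

for volume in (100_000_000, 800_000_000):
    on_demand = monthly_on_demand_cost(volume, PRICE_PER_1K)
    better = "reserved" if on_demand > RESERVED_FEE else "on-demand"
    print(f"{volume:,} tokens: on-demand ${on_demand:,.0f} vs reserved ${RESERVED_FEE:,.0f} -> {better}")
```

A real comparison also has to account for the throughput a reservation actually delivers and its commitment term, so treat this as a first-pass estimate once you have observed your real usage patterns.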

Another advantage of deploying in the cloud is that hyperscalers also offer services that help round out your solution, such as high availability, caching layers, and load balancers.

Recommendations:

- Invest in cloud-based solutions with auto-scaling capabilities

- Start with on-demand and move to reservations once you understand your application patterns

- LLM compute is not the only infrastructure you will need; look into the scalability of your API layer, caches, load balancers, etc.
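
A caching layer in front of the model can absorb repeated requests so identical prompts don't consume tokens twice. Below is a minimal in-process sketch assuming exact-match caching on the prompt plus its sampling parameters. `call_model` is a hypothetical stand-in for your provider's SDK. In production, a shared cache service would be more typical than an in-memory dictionary.

```python
import hashlib
import json
from collections import OrderedDict
from typing import Callable


class LLMResponseCache:
    """Exact-match LRU cache for LLM completions."""

    def __init__(self, call_model: Callable[[str, dict], str], max_entries: int = 1024):
        self._call_model = call_model  # hypothetical wrapper around your provider's SDK
        self._max_entries = max_entries
        self._store: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str, params: dict) -> str:
        # Sampling parameters are part of the key: the same prompt at a
        # different temperature is a different request.
        payload = json.dumps({"prompt": prompt, "params": params}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def complete(self, prompt: str, params: dict) -> str:
        key = self._key(prompt, params)
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._call_model(prompt, params)
        self._store[key] = response
        if len(self._store) > self._max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return response


# Usage with a fake model so the example runs without network access.
calls = []

def fake_model(prompt: str, params: dict) -> str:
    calls.append(prompt)
    return prompt.upper()

cache = LLMResponseCache(fake_model, max_entries=2)
cache.complete("hello", {"temperature": 0})
cache.complete("hello", {"temperature": 0})
print(cache.hits, cache.misses, len(calls))  # 1 1 1
```

Exact-match caching pays off most for deterministic, frequently repeated requests. With a temperature above zero, it deliberately returns the same answer every time, which may or may not be what your application wants.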

Model selection and fine-tuning

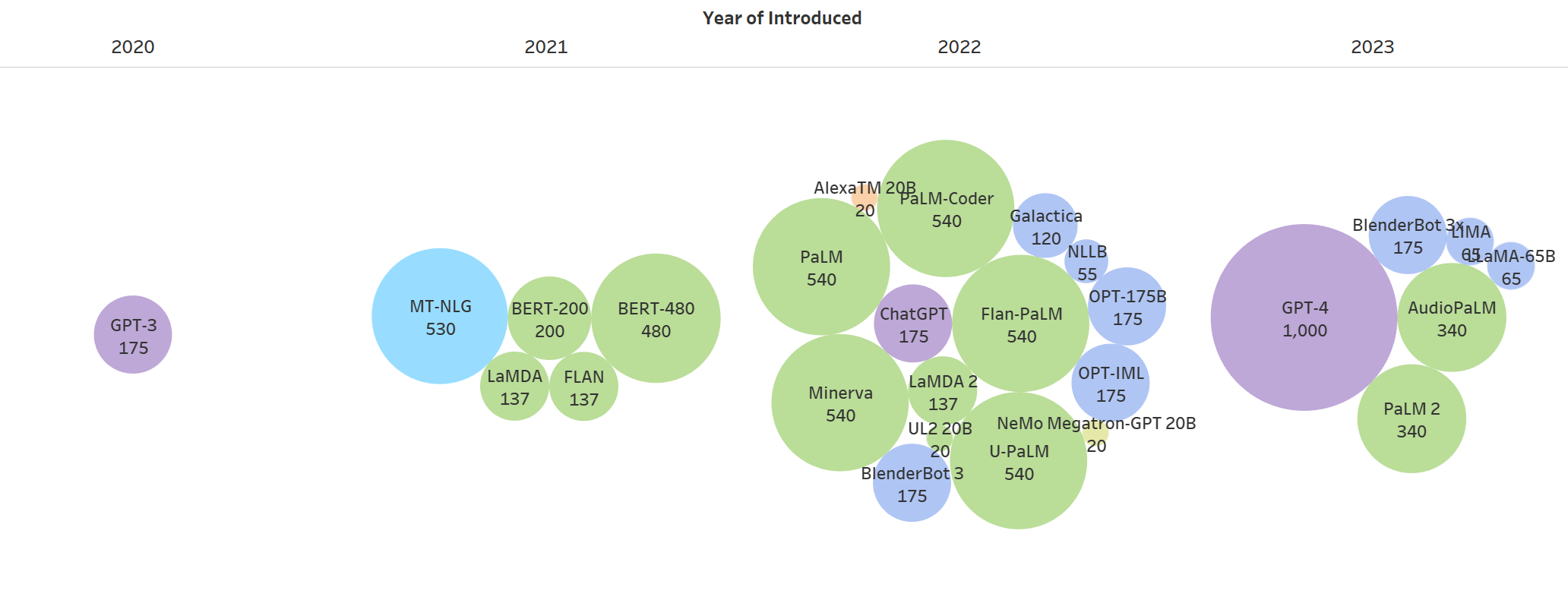

Not all LLMs are created equal, and the competition for the top spot is fierce. Every month, vendors release new versions or enable new features on their flagship models, such as GPT on Azure OpenAI or Gemini on Google Cloud. At a minimum, you should consider published benchmarks, multi-modal capabilities, and context window length.

Choosing the right model for your specific use case, combined with the right prompt engineering and system prompts, can make a world of difference even without fine-tuning. These days, fine-tuning is usually unnecessary unless you have exhausted prompt engineering and properly labeled examples and are still not getting the results you want.

A poorly prompted or poorly tuned model may produce irrelevant or inaccurate results, consume unnecessary resources, or fail to meet the specific needs of your application. Projects can fail because teams conclude that AI can’t do what they require, when often the real issue is how they are interacting with the model.

Recommendations:

- Evaluate multiple models against your specific needs, including multi-modal capabilities and context window length

- Evaluate prompting and examples before investing in fine-tuning

- If struggling, consider whether the issue is model selection or the interaction with the model
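
Evaluating candidate models on the same set of labeled examples makes the comparison concrete. The following minimal sketch assumes each model is wrapped in a hypothetical callable that takes a prompt and returns text. Scoring is exact-match accuracy, a deliberately simple rule; real evaluations often need fuzzier grading.

```python
from typing import Callable

ModelFn = Callable[[str], str]  # hypothetical wrapper: prompt in, text out


def evaluate_models(
    models: dict[str, ModelFn], examples: list[tuple[str, str]]
) -> dict[str, float]:
    """Return exact-match accuracy for each model on (prompt, expected) pairs."""
    scores = {}
    for name, model in models.items():
        correct = sum(
            1
            for prompt, expected in examples
            if model(prompt).strip().lower() == expected.strip().lower()
        )
        scores[name] = correct / len(examples)
    return scores


# Stub "models" so the example runs offline; replace them with real API wrappers.
examples = [("2+2?", "4"), ("Capital of France?", "Paris"), ("Color of the sky?", "blue")]
answers = {"2+2?": "4", "Capital of France?": "Paris", "Color of the sky?": "Blue"}
models = {
    "model-a": lambda p: answers[p],
    "model-b": lambda p: "4",
}
for name, acc in sorted(evaluate_models(models, examples).items(), key=lambda kv: -kv[1]):
    print(f"{name}: {acc:.0%}")
# model-a: 100%
# model-b: 33%
```

You can also hold the model fixed and vary only the prompt. That helps separate a model-selection problem from a problem in how you are interacting with the model.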

Prompt engineering and management

Prompts are the interface between your application and the LLM. The difference between a good one and a bad one boils down to whether you get the expected outputs in a predictable, repeatable way. Even if your application requires the model to be creative, your prompts should still define the guardrails the model must stay within. Slight differences in prompts can produce different outputs, so it’s critical to account for this in your testing processes.

It’s also important to understand the role of system prompts, few-shot examples, wording, and other prompt-building techniques, as well as hyperparameters such as temperature and top-p. Finally, treat prompts and their parameters as real software artifacts: source control them and move them through development, test, and deployment using your regular software quality assurance and workflow patterns.
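
Treating a prompt as a software artifact can be as simple as keeping the system prompt, examples, and sampling parameters together in one versioned definition that lives in source control. This is a minimal sketch. The `PromptTemplate` class and the example prompt are hypothetical. The output is a chat-style list of role/content messages, a format many chat APIs accept, but this is not any specific vendor's SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptTemplate:
    """A prompt plus its parameters, versioned together as one unit."""
    name: str
    version: str
    system_prompt: str
    user_template: str  # str.format placeholders, e.g. {ticket}
    examples: tuple[tuple[str, str], ...] = ()  # few-shot (user, assistant) pairs
    temperature: float = 0.2
    top_p: float = 1.0

    def render(self, **variables: str) -> list[dict[str, str]]:
        """Build messages: system prompt, then examples, then the actual request."""
        messages = [{"role": "system", "content": self.system_prompt}]
        for user_text, assistant_text in self.examples:
            messages.append({"role": "user", "content": user_text})
            messages.append({"role": "assistant", "content": assistant_text})
        messages.append({"role": "user", "content": self.user_template.format(**variables)})
        return messages


# Hypothetical prompt used only for illustration.
SUPPORT_CLASSIFIER = PromptTemplate(
    name="support-ticket-classifier",
    version="2.1.0",
    system_prompt="Classify the ticket as one of: billing, technical, other. Reply with the label only.",
    user_template="Ticket: {ticket}",
    examples=(("Ticket: I was charged twice.", "billing"),),
    temperature=0.0,
)

messages = SUPPORT_CLASSIFIER.render(ticket="The app crashes on login.")
print(len(messages), messages[-1]["content"])  # 4 Ticket: The app crashes on login.
```

Because the template is a plain, immutable value, a change to its wording or its `temperature` shows up as a reviewable diff and can move through development, test, and deployment like any other code change.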

Recommendations:

- Create a source-controlled prompt library with related hyperparameters

- Integrate prompt changes into your CI/CD processes, just like any other software artifact

- Utilize techniques like A/B testing to compare the quality and performance of similar prompts
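
For A/B testing prompts, each user should consistently see the same variant so results aren't mixed within a session. The minimal sketch below uses deterministic hash-based assignment plus a per-variant success rate. The variant names, the experiment name, and the notion of an "acceptable" output (however your team grades it) are all hypothetical.

```python
import hashlib
from collections import defaultdict


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a user to a prompt variant.

    Hashing (experiment, user) means the same user always sees the same variant,
    and different experiments split users independently.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def success_rates(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of acceptable outputs per variant, from (variant, acceptable) records."""
    totals = defaultdict(int)
    successes = defaultdict(int)
    for variant, acceptable in results:
        totals[variant] += 1
        successes[variant] += int(acceptable)
    return {variant: successes[variant] / totals[variant] for variant in totals}


variants = ["prompt-v1", "prompt-v2"]  # hypothetical variant names
chosen = assign_variant("user-42", "ticket-classifier-test", variants)
print(chosen in variants)  # True

# Each record pairs the variant a user saw with whether the output was judged acceptable.
results = [("prompt-v1", True), ("prompt-v1", False), ("prompt-v2", True), ("prompt-v2", True)]
print(success_rates(results))  # {'prompt-v1': 0.5, 'prompt-v2': 1.0}
```

Before declaring a winner, check that the difference is larger than random noise, for example with a standard two-proportion significance test. With small samples, apparent differences are often not real.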

Monitoring and observability

Even though work has been done to reduce hallucinations, and prompting techniques have emerged to decrease them, LLMs can still produce unexpected or undesired outputs. Without proper monitoring, these issues could go unnoticed, damaging user trust and your company’s reputation, or causing other problems, for example, if you use LLM outputs to drive function calling. If you are doing more complex LLM orchestration, such as chaining LLM calls or building prompts from multiple sources, extensive logging and debugging support is a must for proper troubleshooting.

You also need to monitor your LLM operations for classic application metrics such as uptime, response time, tokens consumed per minute, cost, and user counts.
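
A thin wrapper around every model call is a convenient place to capture these metrics. This minimal sketch writes one structured JSON log line per call, whether the call succeeds or fails. The `LLMResult` shape, the `call_model` callable, and the per-token prices are hypothetical placeholders for whatever your SDK and contract actually provide. The `trace_id` parameter lets chained calls share one identifier for troubleshooting.

```python
import json
import logging
import time
import uuid
from dataclasses import dataclass
from typing import Callable, Optional

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("llm")

# Placeholder prices per 1,000 tokens; NOT real vendor rates.
PROMPT_PRICE_PER_1K = 0.005
COMPLETION_PRICE_PER_1K = 0.015


@dataclass
class LLMResult:
    """Hypothetical response shape; adapt to your SDK's response object."""
    text: str
    prompt_tokens: int
    completion_tokens: int


def estimate_cost(prompt_tokens: int, completion_tokens: int) -> float:
    return (prompt_tokens / 1000 * PROMPT_PRICE_PER_1K
            + completion_tokens / 1000 * COMPLETION_PRICE_PER_1K)


def logged_call(
    call_model: Callable[[str], LLMResult],
    prompt: str,
    user_id: str,
    prompt_version: str,
    trace_id: Optional[str] = None,
) -> LLMResult:
    """Call the model and emit one structured log line, even if the call raises."""
    trace_id = trace_id or str(uuid.uuid4())  # pass the same ID through chained calls
    start = time.perf_counter()
    result = None
    try:
        result = call_model(prompt)
        return result
    finally:
        record = {
            "event": "llm_call",
            "trace_id": trace_id,
            "status": "ok" if result is not None else "error",
            "latency_ms": round((time.perf_counter() - start) * 1000, 1),
            "user_id": user_id,
            "prompt_version": prompt_version,  # ties each output to a versioned prompt
        }
        if result is not None:
            record.update(
                prompt_tokens=result.prompt_tokens,
                completion_tokens=result.completion_tokens,
                cost_usd=round(estimate_cost(result.prompt_tokens, result.completion_tokens), 6),
            )
        logger.info(json.dumps(record))


# Usage with a fake model so the example runs offline.
def fake_model(prompt: str) -> LLMResult:
    return LLMResult(text="ok", prompt_tokens=100, completion_tokens=20)

logged_call(fake_model, "Summarize this ticket.", user_id="u-1", prompt_version="2.1.0")
```

Structured log lines like these can feed dashboards for latency, token consumption, cost, and users, and give you the trail you need when debugging a chain of calls.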

Recommendations:

- Implement comprehensive logging to aid your troubleshooting

- Set up alerting for anomalies on LLM output

- Continue monitoring for classic metrics like uptime, users, and resource consumption
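
Alerting on LLM output anomalies can start with simple numeric signals, such as response length, the rate of empty responses, or the rate of refusals. Here is a minimal sketch of a rolling z-score detector over one such signal. Response length as the signal, the window size, and the threshold are all assumptions to tune for your application.

```python
import statistics
from collections import deque


class RollingAnomalyDetector:
    """Flags values that sit far outside the recent rolling distribution."""

    def __init__(self, window: int = 100, threshold: float = 3.0, min_samples: int = 20):
        self.values = deque(maxlen=window)  # most recent observations only
        self.threshold = threshold          # z-score above which we flag a value
        self.min_samples = min_samples      # don't judge until there is a baseline

    def observe(self, value: float) -> bool:
        """Record a value; return True if it is anomalous relative to the prior window."""
        is_anomaly = False
        if len(self.values) >= self.min_samples:
            mean = statistics.fmean(self.values)
            stdev = statistics.pstdev(self.values)
            if stdev == 0:
                # Perfectly flat baseline: any change at all is unusual.
                is_anomaly = value != mean
            else:
                is_anomaly = abs(value - mean) / stdev > self.threshold
        self.values.append(value)
        return is_anomaly


# Usage: feed in the length (in characters) of each response.
detector = RollingAnomalyDetector(window=50, threshold=3.0, min_samples=10)
baseline = [200, 210, 190, 205, 195, 200, 210, 190, 205, 195]
print([detector.observe(n) for n in baseline])  # all False: still building a baseline
print(detector.observe(2000))  # True: far outside the recent range
```

A `True` result would be routed to your existing alerting tool. One design trade-off: flagged values still enter the window, so a sustained shift eventually becomes the new baseline instead of alerting forever.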

Ethical considerations and bias mitigation

LLMs can inadvertently perpetuate or amplify biases present in their training data, leading to unfair or discriminatory outputs. Major vendors such as Microsoft, OpenAI, and Google do a lot of work to prevent this, but it can still happen. Once you introduce your own examples, fine-tune, or set up a RAG process, your own data can also introduce biases into the model’s outputs.

Going forward without regard for the ethical implications of your model's output and any biases it exhibits can result in reputational damage, legal issues, or direct harm to users. Addressing ethical considerations and implementing bias mitigation strategies is crucial to ensuring your application is fair and trustworthy, and avoiding these issues also makes economic sense for the business.

If a company is serious about adopting Generative AI, it should establish an ethics review team drawn from business, legal, InfoSec, development, and IT staff. The team's mandate is to review the model's safety filters, vendor guarantees, and the data introduced to the model, and to regularly verify that the model is still acting within the expected guidelines.

Recommendations:

- Conduct regular bias audits and content safety filter reviews

- Implement fairness metrics through scheduled model evaluations

- Establish an ethics review team to execute this mandate
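
A scheduled model evaluation can track a simple fairness metric such as demographic parity difference: the gap between the highest and lowest rate of favorable outcomes across groups. Here is a minimal sketch. It assumes an evaluation set where each case carries a hypothetical group label and the model's output has already been graded as favorable or not. Which groups to compare, what counts as "favorable," and the tolerance are decisions for your ethics review team.

```python
from collections import defaultdict


def favorable_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Rate of favorable outcomes per group, from (group, is_favorable) records."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, is_favorable in records:
        totals[group] += 1
        favorable[group] += int(is_favorable)
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_difference(records: list[tuple[str, bool]]) -> float:
    """Largest gap in favorable-outcome rates between any two groups."""
    rates = favorable_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical graded evaluation results.
records = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
MAX_GAP = 0.1  # hypothetical tolerance set by the review team

gap = demographic_parity_difference(records)
print(favorable_rates(records), f"gap={gap:.2f}")
# {'group_a': 0.75, 'group_b': 0.25} gap=0.50
if gap > MAX_GAP:
    print("Fairness check failed: escalate to the ethics review team")
```

Demographic parity is only one of several fairness metrics, and a small gap does not by itself prove an application is fair. It is most useful as a trend tracked across scheduled evaluations.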

Conclusion

Deploying LLM applications in production is getting easier every day, but there are still important prerequisites you need to cover to set yourself up for success. Much like the rest of modern software engineering, it is an iterative and continuous process, and you'll likely need to revisit and refine these areas. However, getting a good handle on these processes and allowing your development teams to mature their use of the technology will give you an advantage over competitors who are still deciding whether to move forward.
